Christophe Waelchli, Product Manager, onsemi

The modern hearing aid could not exist without the massive leaps made in semiconductor manufacturing technology over the last several decades, as well as increasingly sophisticated system architectures. One easy way to chart how processors have evolved over that time is by the number of transistors they integrate. The first personal computer (PC) processors had tens of thousands of transistors and were built using semiconductor processes with feature sizes of several micrometers. Today, these processors have tens of billions of transistors and are built on technology nodes that are thousands of times smaller.

This level of integration doesn’t just benefit the PC industry, of course. The entire semiconductor industry has access to this ever-evolving technology, allowing manufacturers in all vertical markets to make massive strides forward. The hearing aid market in particular has benefited from multi-core parallel processing architectures that greatly increase processing power, reduce the number of clock cycles needed for each task and dramatically reduce power consumption. Such cores are able to run highly sophisticated algorithms, including artificial intelligence (AI).

In the history of the electronic hearing aid, the first significant development was probably the introduction of frequency-based non-linear amplification. This applies amplification to each frequency band according to the level of energy contained in that band: within each band, loud sounds receive less gain (or are attenuated) while softer ones are amplified more, which gives hearing aid users a high level of sound quality and customization. Non-linear amplification requires significant processing power so that it can be applied in the shortest possible time, minimizing system latency. This is enabled by the specialized system-level design that goes into the ultra-low-power, highly parallel cores at the heart of electronic hearing aids.
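The per-band gain rule above is often expressed as a static compression curve with a knee and a compression ratio. The sketch below assumes that model; the function names and parameter values (`gain_db`, `knee_db`, `ratio`) are hypothetical and do not describe any particular product's fitting formula. Band levels are assumed to have been measured already, for example by a filter bank.

```python
from typing import List, Sequence


def compressive_gain_db(level_db: float, gain_db: float = 20.0,
                        knee_db: float = 50.0, ratio: float = 3.0) -> float:
    """Gain (dB) applied to one frequency band for a given input level (dB).

    Below the knee the band receives the full gain; above it, each extra dB
    of input produces only 1/ratio dB of extra output, so loud sounds get
    less gain and, far enough above the knee, are attenuated.
    """
    if level_db <= knee_db:
        return gain_db
    return gain_db - (level_db - knee_db) * (1.0 - 1.0 / ratio)


def process_bands(levels_db: Sequence[float],
                  gains_db: Sequence[float]) -> List[float]:
    """Output level of each band; every band has its own gain setting."""
    return [level + compressive_gain_db(level, gain_db=g)
            for level, g in zip(levels_db, gains_db)]
```

With the defaults, a 40 dB band is boosted to about 60 dB, an 80 dB band leaves at about 80 dB and a 110 dB band is attenuated to about 90 dB, so a 70 dB input range is squeezed into roughly 30 dB of output.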

Generally speaking, on top of allowing efficient non-linear amplification, the introduction of digital signal processors (DSPs) allowed hearing aid manufacturers to add features that were not previously possible, such as feedback cancellation, while reducing system power. The audio signal could now be manipulated in entirely new ways using digital filters that are even more selective in terms of the frequencies, and therefore the types of sound, that are processed. The integration of more on-chip memory also made it possible to implement new modes of operation, such as adapting the way audio signals were processed depending on the listening environment. For example, hearing aids could now adapt to the wearer being in a crowd, at a party or in a large stadium. These modes allowed the hearing aid to adapt to its environment and prevailing conditions, using algorithms that could change the filters in real time.
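Feedback cancellation is commonly built on an adaptive filter that learns the acoustic path from the receiver (loudspeaker) back to the microphone and subtracts its estimate. The sketch below uses the normalized least-mean-squares (NLMS) algorithm, one common choice, in an open-loop simulation with a made-up feedback path; the function name, step size and tap count are hypothetical. It ignores the correlation between receiver and microphone signals that a real closed-loop hearing aid has to handle.

```python
import random


def nlms_feedback_canceller(speaker, mic, taps=8, mu=0.5, eps=1e-8):
    """Estimate the feedback path with NLMS and remove it from the mic signal.

    speaker: samples sent to the receiver (loudspeaker)
    mic:     microphone samples containing leaked receiver sound
    Returns (estimated feedback-path taps, feedback-cancelled mic signal).
    """
    w = [0.0] * taps          # estimated feedback-path impulse response
    history = [0.0] * taps    # most recent receiver samples, newest first
    cleaned = []
    for s, m in zip(speaker, mic):
        history = [s] + history[:-1]
        estimate = sum(wi * hi for wi, hi in zip(w, history))
        e = m - estimate                          # mic with feedback removed
        norm = eps + sum(hi * hi for hi in history)
        w = [wi + mu * e * hi / norm for wi, hi in zip(w, history)]
        cleaned.append(e)
    return w, cleaned


# Simulation: white-noise receiver signal and a hypothetical feedback path.
rng = random.Random(0)
speaker = [rng.gauss(0.0, 1.0) for _ in range(2000)]
true_path = [0.0, 0.3, -0.2, 0.1]
mic = [sum(true_path[k] * speaker[n - k]
           for k in range(len(true_path)) if n - k >= 0)
       for n in range(len(speaker))]
w, cleaned = nlms_feedback_canceller(speaker, mic)
```

Because the simulated microphone contains only feedback, `cleaned` decays toward zero and `w` converges toward `true_path`; in a real device the cleaned signal would still carry the wanted external sound.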

Today, digital hearing aids are still based on this approach, but the addition of wireless connectivity has broadened their scope of operation. Once hearing aids could connect wirelessly to other devices, it became possible to stream audio between them and to control the hearing aid from a smartphone app, instead of fumbling around behind one’s ears for the push buttons and volume controls on the hearing aid.

Typically, this is implemented using Bluetooth® technology to connect the hearing aid to a consumer device, such as a TV or cell phone. Once the hearing aid is connected to a cell phone, the possibilities continue to expand: using an app running on the phone, the user can gain greater control over the modes of operation. This combination brings digital hearing aids into the realm of the Internet of Things (IoT).

A more recent development, and again one that is largely enabled by continued increases in integration, is the introduction of AI. Putting AI into the hearing aid makes it even better able to react and adapt to the user’s requirements and preferences, and to the prevailing conditions.

Higher integration for next-generation hearing aids

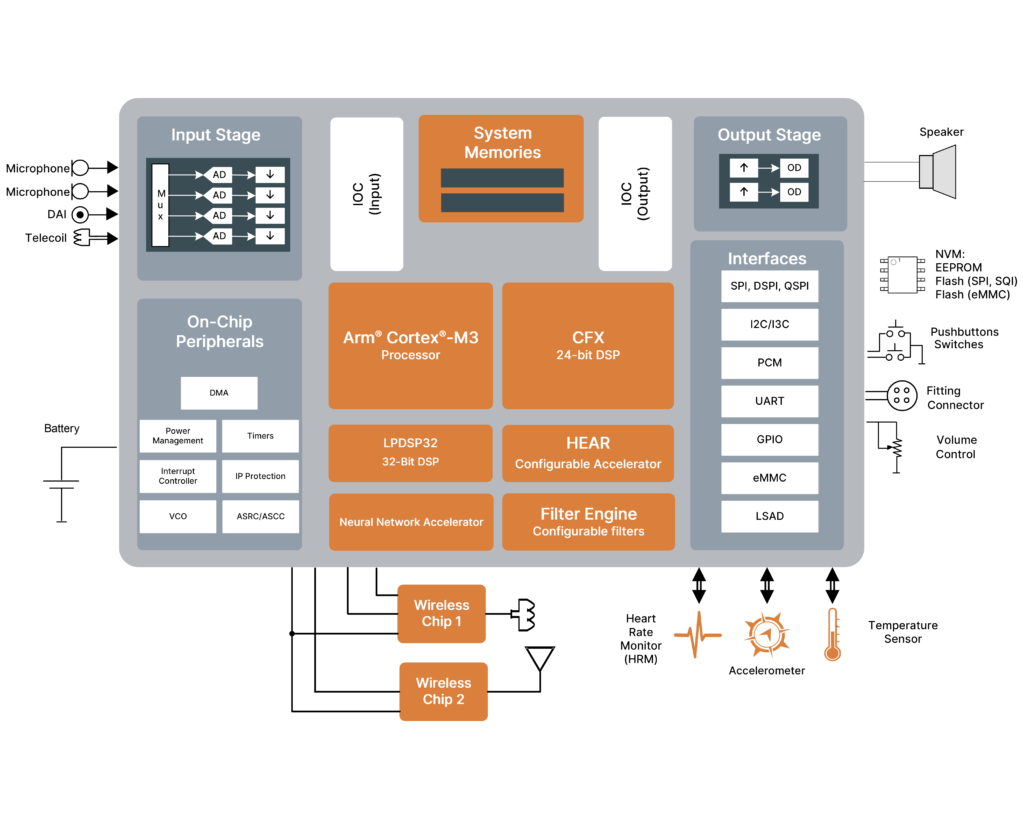

The latest example of how integration is enabling the next generation of AI-enabled hearing aids and hearables is the EZAIRO® 8300 high-end audio DSP from onsemi™ (refer to Figure 1). It integrates six processor cores, including a neural network processor dedicated to accelerating AI functionality. This sixth-generation DSP leverages onsemi’s expertise in system-level audio processor design to deliver twice the processing power of its predecessor, the Ezairo 7100, while achieving lower power consumption.

The open, programmable DSP-based system offers best-in-class performance in terms of MIPS/mW, while still allowing manufacturers to implement their own custom, software-defined functionality. This is thanks to the way each of the processing cores has been designed, which allows the same chip to be used in both hearing aid and hearable applications, each with its own requirements.

Programmability lies at the heart of this flexibility and is supported by the six processing cores. The CFX DSP is a highly cycle-efficient core based on a 24-bit fixed-point, dual-MAC, dual-Harvard architecture. It is complemented by an Arm® Cortex®-M3 core and its subsystem. Typically, either the CFX or the Arm Cortex-M3 can act as the main controller on the Ezairo 8300.
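To illustrate what a 24-bit fixed-point, dual-MAC datapath computes, the sketch below performs a dot product (the core operation of FIR filtering) in Q1.23 format, two multiply-accumulates per loop iteration, followed by rounding and saturation. The Q1.23 format, rounding mode and saturation behavior are assumptions, and this is not the CFX instruction set; note also that Python integers never overflow, whereas a real accumulator has a finite number of guard bits.

```python
FRAC_BITS = 23                      # Q1.23: 1 sign bit, 23 fractional bits
Q23_MAX = (1 << FRAC_BITS) - 1      # largest 24-bit value (just under +1.0)
Q23_MIN = -(1 << FRAC_BITS)         # smallest 24-bit value (-1.0)


def saturate(value):
    """Clamp an integer to the signed 24-bit range."""
    return max(Q23_MIN, min(Q23_MAX, value))


def to_q23(x):
    """Convert a float to Q1.23, saturating values outside [-1, 1)."""
    return saturate(round(x * (1 << FRAC_BITS)))


def from_q23(v):
    """Convert a Q1.23 integer back to a float."""
    return v / (1 << FRAC_BITS)


def mac_dot_q23(a, b):
    """Dot product of two Q1.23 vectors, two MACs per loop iteration."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    acc = 0  # products carry 46 fractional bits and accumulate at full width
    for i in range(0, len(a) - 1, 2):
        acc += a[i] * b[i] + a[i + 1] * b[i + 1]   # the two lanes of a dual MAC
    if len(a) % 2:
        acc += a[-1] * b[-1]                       # leftover tap for odd lengths
    # Round to nearest (ties toward +infinity), shift back to Q1.23, saturate.
    return saturate((acc + (1 << (FRAC_BITS - 1))) >> FRAC_BITS)
```

For example, dotting the Q1.23 values of 0.5, 0.25 and -0.125 with 0.5 each returns exactly 0.3125, while 0.9·0.9 + 0.9·0.9 exceeds the representable range and saturates to just under 1.0 instead of wrapping around.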

Alongside these two general-purpose cores are four additional processors dedicated to accelerating specific tasks. These include the HEAR configurable accelerator, a signal processing engine optimized for advanced algorithms such as dynamic range compression, directional processing, feedback cancellation and noise reduction.
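Directional processing is often introduced through the first-order differential (delay-and-subtract) microphone pair. The sketch below shows that textbook technique, not the HEAR accelerator's algorithm; the function name and the whole-sample delay are assumptions. At a 64 kHz sampling rate, a 2-sample delay would correspond to a hypothetical mic spacing of roughly 10.7 mm (2 / 64,000 s × 343 m/s).

```python
def delay_and_subtract(front, rear, delay):
    """First-order differential (delay-and-subtract) beamformer.

    front, rear: samples from the front and rear microphones
    delay:       acoustic travel time between the mics, in whole samples
    Sound from directly behind reaches the rear mic `delay` samples before
    the front mic, so subtracting the delayed rear signal cancels it.
    """
    out = []
    for n in range(len(front)):
        delayed_rear = rear[n - delay] if n >= delay else 0.0
        out.append(front[n] - delayed_rear)
    return out


# Usage: the same source arriving from behind and from the front.
source = [1.0, -2.0, 3.0, 0.5, -1.5, 2.5, 0.0, 1.0]
d = 2
from_behind = delay_and_subtract([0.0] * d + source[:-d], source, d)  # rear mic hears it first
from_front = delay_and_subtract(source, [0.0] * d + source[:-d], d)   # front mic hears it first
```

Here `from_behind` is all zeros while `from_front` passes the sound through. Practical designs typically use fractional delays and equalize the output, because a first-order differential pair attenuates low frequencies by about 6 dB per octave.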

The programmable filter engine applies pre- and post-processing filters, such as finite impulse response (FIR) and infinite impulse response (IIR) filters, including biquads. The filter engine features an ultra-low-latency signal path to minimize delay in applications that implement active noise cancellation, or where occlusion management is needed. A secondary 32-bit fixed-point, dual-MAC, dual-Harvard core, the LPDSP32, is also provided for further compute-intensive operations.
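The filter engine's internal structure is not described here, so as a general illustration of the kind of biquad such an engine applies, the sketch below computes low-pass coefficients with the widely used RBJ Audio EQ Cookbook formulas and runs them in transposed direct form II. The function names are hypothetical, and floating point is used for clarity where a hearing aid implementation would use fixed point.

```python
import math


def lowpass_biquad_coeffs(fs, fc, q=1 / math.sqrt(2)):
    """Second-order low-pass coefficients (RBJ Audio EQ Cookbook).

    The default q gives a Butterworth response. Returns (b, a) normalized
    so that a0 = 1; a holds only [a1, a2].
    """
    w0 = 2 * math.pi * fc / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    b = [(1 - cos_w0) / 2 / a0, (1 - cos_w0) / a0, (1 - cos_w0) / 2 / a0]
    a = [-2 * cos_w0 / a0, (1 - alpha) / a0]
    return b, a


def biquad_tdf2(x, b, a):
    """Filter samples x with one biquad in transposed direct form II."""
    s1 = s2 = 0.0                      # the two state (delay) registers
    y = []
    for xn in x:
        yn = b[0] * xn + s1
        s1 = b[1] * xn - a[0] * yn + s2
        s2 = b[2] * xn - a[1] * yn
        y.append(yn)
    return y
```

For instance, a 1 kHz low-pass at a 64 kHz sampling rate settles to unity gain for a constant (DC) input and removes an input alternating at the Nyquist frequency. Transposed direct form II needs only two state variables per biquad, which is one reason it is a common choice for cascaded filters.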

The neural network accelerator (NNA), which is also configurable, is dedicated to running neural networks on the system on chip (SoC). Edge processing is being widely adopted by manufacturers looking to exploit the power of AI without incurring the latency and power penalties of offloading all of the processing to cloud platforms. The NNA contains 16 multiply/accumulate (MAC) blocks with input and coefficient registers, and supports coefficient compression/decompression and pruning. All of the processing cores, including the NNA, are supported by software libraries that give manufacturers the tools they need to develop their next generation of digital hearing products.
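The NNA's actual compression format and scheduling are not described in this article. As a rough illustration of why pruning and coefficient compression help, the sketch below applies magnitude pruning, stores only the non-zero coefficients as (index, value) pairs, and counts how many passes each output would need if 16 MAC units worked in parallel. The storage format, the pruning threshold and the pass count are all assumptions, and the function names are hypothetical.

```python
def prune(weights, threshold):
    """Magnitude pruning: zero every coefficient smaller than threshold."""
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in weights]


def compress(weights):
    """Keep only non-zero coefficients as (input index, value) pairs per output."""
    return [[(j, w) for j, w in enumerate(row) if w != 0.0] for row in weights]


def sparse_dense_layer(x, rows, bias, mac_lanes=16):
    """Fully connected layer with ReLU, computed from compressed rows.

    Also returns a rough pass count: each output needs
    ceil(non-zeros / mac_lanes) passes if mac_lanes MACs run in parallel.
    """
    outputs, passes = [], 0
    for row, b in zip(rows, bias):
        acc = b
        for j, w in row:                         # zero weights are never fetched
            acc += w * x[j]
        passes += -(-len(row) // mac_lanes)      # ceiling division
        outputs.append(max(0.0, acc))            # ReLU activation
    return outputs, passes
```

Pruning a small layer with a threshold of 0.05 halves the stored coefficients and the multiply count while leaving the surviving weights unchanged; on a larger layer, the same effect reduces both memory traffic and the number of MAC passes.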

The SoC implements clock and voltage scaling to reduce power consumption when the full processing power isn’t required, helping to extend battery life. The high-fidelity audio system integrated in the Ezairo 8300 audio DSP has a dynamic range of 108 dB and a sampling frequency of up to 64 kHz. A total of 1.4 MB of memory is also integrated and available as a shared resource for all processing cores. This is large enough to run a full, sophisticated application covering audio processing, hearing enhancement and biometric tasks for the most advanced hearing aids without external support. Security is also provided, allowing manufacturers’ embedded software to be encrypted when stored in external memory.
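Clock and voltage scaling pays off because, to first order, dynamic CMOS power scales as P = C·V²·f: lowering the clock alone saves power linearly, but lowering the supply voltage with it saves quadratically more. The sketch below picks the lowest-power operating point that still meets a workload's clock requirement. The operating-point table is entirely hypothetical (not Ezairo 8300 figures), and leakage power is ignored.

```python
# Hypothetical operating points (clock in MHz, core voltage in V);
# these are NOT Ezairo 8300 figures.
OPERATING_POINTS = [(10, 0.60), (25, 0.70), (50, 0.80), (100, 1.00)]


def dynamic_power(freq_mhz, volts, c_eff=1.0):
    """First-order CMOS dynamic power, P = C_eff * V^2 * f, arbitrary units.

    The switching activity factor is folded into c_eff; leakage is ignored.
    """
    return c_eff * volts ** 2 * freq_mhz


def pick_operating_point(required_mhz):
    """Lowest-power operating point whose clock still meets the workload."""
    feasible = [(f, v) for f, v in OPERATING_POINTS if f >= required_mhz]
    if not feasible:
        raise ValueError("workload exceeds the fastest operating point")
    return min(feasible, key=lambda point: dynamic_power(*point))
```

In this model, a workload needing 20 MHz runs at the 25 MHz, 0.7 V point and draws about 12% of the dynamic power of the 100 MHz, 1.0 V point, compared with 25% if only the clock were reduced.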

A number of interfaces are included: two PCM, three I2C, two I3C, two SPI, one UART, one eMMC and up to 36 GPIO interfaces, as well as eight inputs for the on-chip low-speed ADCs. The I3C bus is based on the Improved Inter-Integrated Circuit specification developed by the MIPI Alliance, designed to enhance sensor system design in mobile wireless products. The audio path supports high dynamic range and sampling frequencies, with a configurable input stage that can support up to four microphones and an output stage that supports two speakers.

New opportunities for hearable technology

As well as digital hearing aids, the Ezairo 8300 audio DSP is suited to the emerging product category referred to as hearables. This includes hearing aids available without a prescription (over the counter), as well as earbuds. The line between these products is becoming increasingly blurred, as hearing aids provide more value-added features such as streaming audio from personal devices including phones and tablets.

Hearable technology greatly extends the opportunity to add value, to include live translation, dictation and navigation services. In fact, anything that can be delivered audibly through a cellular device could be provided through hearable solutions. As it becomes possible to integrate other technologies, such as MEMS motion sensors, into these wearable devices, we can expect the user interface paradigm to be redefined.

Conclusion

Hearing aid technology is constantly evolving, driven partly by developments in semiconductor manufacturing technology but also by novel system architectures that make it possible to do more while consuming the same power. By adopting an advanced 22 nm process for the Ezairo 8300, onsemi has produced an SoC capable of powering the next generation of advanced hearing aids and hearables.
