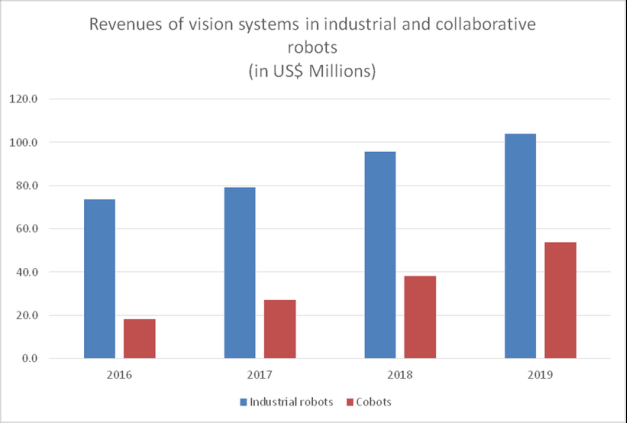

Innovation in robotics is moving ahead at a fast pace, spearheading the expected proliferation of robots in new and existing applications. Deploying sensors in robotics allows for the creation of robots that can “see” and “feel” in a biomimetic way, as humans do. These sensor-enabled, advanced robots can now undertake more complicated tasks and are being deployed into industrial, commercial, domestic, logistics and other sectors where robot penetration was previously limited. The market for robotic vision and force sensing alone is expected to reach more than $16.1 billion by 2027, as described in the newly launched IDTechEx report Sensors for Robotics: Technologies, Markets and Forecasts 2017-2027. The graph below plots the forecast short-term growth in the value of vision systems deployed in industrial and collaborative robots, which represent only one segment of the robotic systems affected by the development of sensing platforms with extensive capabilities.

But why are we experiencing such fast adoption at this point in time?

Revenues of vision systems in industrial and collaborative robots

Software advances hand in hand with hardware innovation

Early robots could only perform tasks under highly structured conditions and with extensive safety measures, because they had little perception of changes in their operating environments. Applying Artificial Intelligence (AI) concepts to robotics helps reduce these limitations. By processing sensor data (visual, tactile, etc.) gathered from their operating environment, new generations of robots gain decision-making capabilities that optimize their performance. Data-driven task execution allows for higher precision even under conditions of increased randomness. In essence, robots with greater awareness are becoming better at the actions they are tasked with, and are capable of additional actions previously thought too complex for robotic systems. These improvements are enabling robots to be deployed in more demanding application spaces, which is a key reason for the expected accelerated proliferation of sensor-driven robotic systems.

Key enablers of this robotic revolution can be found in both software and hardware development efforts, which have produced advanced sensor platforms and processing algorithms, along with intuitive, user-friendly interfaces.

Henrik Christensen, the Executive Director of the Institute for Robotics and Intelligent Machines at the Georgia Institute of Technology in Atlanta, Georgia, sees tremendous potential for robots with such capabilities, especially as prices are coming down. He said back in 2015: “We’re getting much cheaper sensors than we had before. It’s coming out of cheap cameras for cell phones, where today you can buy a camera for a cell phone for $8 to $10. And we have enough computer power in our cell phones to be able to process it. The same thing is happening with laser ranging sensors. Ten years ago, a modest quality laser range sensor would be $10,000 or more. Now they’re $2,000.”

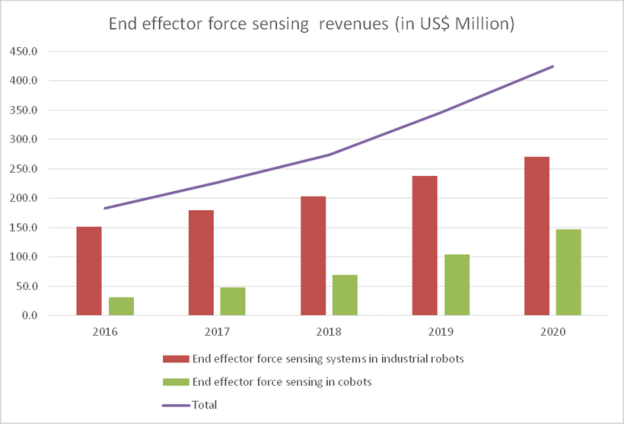

All in all, machine vision and force sensing enable the design of more versatile, safer robots for a wider range of applications. Different sensing systems suit different application spaces, however: each type of robot under development has its own requirements and needs a sensor platform with the right set of features.

End-effector force sensing revenues in industrial and collaborative robots

All this and much more is discussed in detail in the newly launched IDTechEx report Sensors for Robotics: Technologies, Markets and Forecasts 2017-2027. Some of this information will also be shared in an IDTechEx webinar on the topic, due to be broadcast live on 7 February 2017. For more information, please contact the report’s author, Dr Harry Zervos, principal analyst with IDTechEx Inc., at h.zervos@idtechex.com, or Alison Lewis at a.lewis@idtechex.com.