In 2022, the global hyperscale computing market will continue its meteoric rise from about $147 billion last year with a projected revenue CAGR of 27.4 percent through 2028, according to a recent report by Emergen Research. Key growth drivers are numerous and include increasing cloud workloads, data center optimization, social media platforms, and the emergence of data-as-a-service.

During this time, companies designing power semiconductors into their hyperscale computing platforms will face the following challenges that must be resolved in order to drive continued revenue growth.

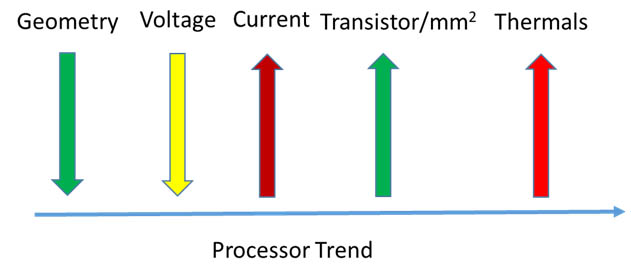

As the image above shows, thermal management challenges dominate as processor currents increase. Two ways to address this problem are:

A) Raise the voltage of the distributed source power rail, e.g. from 12V to 48V

B) Place current multipliers (voltage dividers) in close proximity to the load to shorten the distance the high load current has to travel

These techniques decrease I²R losses, which in turn significantly reduces thermal management overhead. Lower thermal management overhead means more of the available source power goes to computation (better FLOPS/Watt).
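To make the effect of a higher distribution voltage concrete, here is a minimal Python sketch of the I²R relationship. The load power and path resistance are hypothetical values chosen for illustration, not figures from this article, and the model is simplified: it treats the delivered power as fixed and ignores converter efficiency.

```python
def distribution_loss_watts(load_power_w, bus_voltage_v, path_resistance_ohm):
    """Return the I^2 * R loss in a distribution path feeding a fixed load power."""
    current_a = load_power_w / bus_voltage_v       # I = P / V
    return current_a ** 2 * path_resistance_ohm    # P_loss = I^2 * R


# Hypothetical values for illustration only (assumptions, not from the article).
LOAD_POWER_W = 1200.0         # power delivered to the load
PATH_RESISTANCE_OHM = 0.001   # 1 milliohm of busbar, cable and connector resistance

loss_12v = distribution_loss_watts(LOAD_POWER_W, 12.0, PATH_RESISTANCE_OHM)
loss_48v = distribution_loss_watts(LOAD_POWER_W, 48.0, PATH_RESISTANCE_OHM)

print(f"12V bus: {loss_12v:.3f} W lost in the path")
print(f"48V bus: {loss_48v:.3f} W lost in the path")
print(f"Loss ratio (12V / 48V): {loss_12v / loss_48v:.1f}x")
```

Because loss scales with the square of the current, quadrupling the bus voltage for the same delivered power cuts the current by 4x and the resistive loss in the same path by 16x, whatever resistance value is assumed.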

There are three High-Performance Computing (HPC) applications that each have their own particular set of needs – artificial intelligence and machine learning (AI/ML), supercomputing and hyperscale computing.

The goal of AI/ML is to build meaningful analysis models (training) from a massive amount of data. This has to be done predictably, repeatedly and with ultra-low latency. Specialized processors and computing systems have been developed to achieve this goal; examples are the Nvidia DGX and Cerebras CS-2 systems. These platforms use very high-performance processors that were manufactured on a 10nm process and are now moving to 7nm and even 5nm.

Core voltage rails for such processors are in the range of 0.8 to 0.66 volts, and are edging toward 0.34 volts with steady state currents >1kA and peak currents >2kA.

At such high currents, power delivery becomes extremely important in managing I²R losses. Lateral power delivery, with current multipliers in close proximity to the load, helps reduce power delivery network (PDN) losses for load currents up to 500A, while vertical power delivery reduces PDN losses further for loads above 1kA.
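The sensitivity of power delivery network (PDN) losses to resistance at these currents can be sketched in a few lines of Python. The core voltage and steady-state current below follow the ranges quoted above; the two PDN resistance values are purely hypothetical placeholders and are not measured figures for lateral or vertical power delivery.

```python
def pdn_loss_watts(load_current_a, pdn_resistance_ohm):
    """Resistive loss in the PDN: P_loss = I^2 * R."""
    return load_current_a ** 2 * pdn_resistance_ohm


def loss_relative_to_load(core_voltage_v, load_current_a, pdn_resistance_ohm):
    """PDN loss expressed as a fraction of the power delivered to the processor."""
    load_power_w = core_voltage_v * load_current_a
    return pdn_loss_watts(load_current_a, pdn_resistance_ohm) / load_power_w


CORE_VOLTAGE_V = 0.8       # upper end of the core rail range quoted in the text
LOAD_CURRENT_A = 1000.0    # 1 kA steady-state current, as quoted in the text

# Hypothetical PDN resistances (assumptions for illustration only).
EXAMPLE_PDN_OHMS = {
    "longer path (assumed 100 micro-ohm)": 100e-6,
    "shorter path (assumed 10 micro-ohm)": 10e-6,
}

for label, resistance_ohm in EXAMPLE_PDN_OHMS.items():
    loss_w = pdn_loss_watts(LOAD_CURRENT_A, resistance_ohm)
    fraction = loss_relative_to_load(CORE_VOLTAGE_V, LOAD_CURRENT_A, resistance_ohm)
    print(f"{label}: {loss_w:.1f} W lost, {fraction:.2%} of load power")
```

At a fixed load current the loss is directly proportional to the PDN resistance, which is why shortening the high-current path matters so much once currents reach the kiloamp range.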

For hyperscale cloud computing datacenters, the two main design influencers are total cost of ownership and power usage efficiency. Using 48V distribution in the racks not only reduces rack floor space but also allows the use of scalable 48V battery backup systems that eliminate large uninterruptible power systems. All of this results in higher rack density, which equals higher compute capacity and better total cost of ownership.

Prediction #2: Data Center Operators Will Continue the Debate Between Adopting AC or DC Power

DC distribution offers a few enticing benefits, including the elimination of large AC-DC UPS systems and not having to worry about compute load distribution. However, it also has its own drawbacks: human exposure to high-voltage DC is unforgiving at best, the pool of high-voltage DC engineering talent is limited, and it is difficult to find multiple vendors that offer high-voltage DC ecosystem components (fuses, high-power disconnects, etc.).

The most common approach in modern data centers is to bring three-phase AC into the building and split it into three single-phase AC lines, each backed up by its own UPS system. This architecture not only calls for a higher upfront infrastructure cost, but also requires the workload to be distributed evenly across all three phases. On the other hand, it benefits from a large talent pool and a well-established ecosystem of vendor partners with plenty of expertise in executing large-scale data center projects.

It is prudent to note that, irrespective of the choice between AC and DC distribution, all electronic loads eventually run on low-voltage DC. Hence, optimizing the last mile with 48V DC distribution instead of the typical 12V DC distribution yields huge benefits in power loss reduction. Furthermore, converting directly from 48V to high-current point-of-load (PoL) rails reduces power losses further, resulting in a much larger reduction in thermal management overhead. This combination makes the most of the available input power to deliver high-density computing and a better total cost of ownership (TCO).