The Internet of Things (IoT) has been a transformative force in the world of technology, connecting an ever-expanding array of devices and sensors to the internet. However, to truly unlock its potential, IoT needs intelligence, and that’s where Edge AI comes into play.

On December 6th, 2023, leading semiconductor company STMicroelectronics announced the launch of the ST Edge AI Suite, which enables companies to embed AI-enabled ST products across various industrial applications.

In this context, Electronics Maker had the opportunity to join a recent media briefing presented by Matteo Maravita, Senior Manager, AI Competence Center and Smartphone Competence Center, Asia Pacific Region, STMicroelectronics, who discussed ST’s vision, developer challenges, edge AI solutions, future products, and how the company helps make edge AI a reality.

- How is Edge AI becoming a transformative technology in many applications?

AI is a truly transformative technology that is changing many things. We see it as essential to building a future connected world, a world of smart everything where billions of things are more secure, more connected, and more intelligent. We call this the Cloud Connected Intelligent Edge. We believe that these devices will be much more autonomous and, at the same time, more connected to the cloud, increasing not only the amount of data created but also the local processing of that data. These devices cover many areas of our daily life: we find them at home, in factories, in workplaces, in cities and buildings, and in mobility solutions. When we look at this wide variety of devices and think about the related business models, we can understand how much edge AI is going to impact our future.

- How can ST seize the innovative opportunities of edge AI?

STM32 edge AI solutions make devices smarter and more energy efficient, improving the user experience and opening the door to many new application possibilities. We offer user-friendly online tools and software that help embedded developers create, evaluate, and deploy machine learning algorithms on STM32 microcontrollers and microprocessors in a fast and cost-effective way.

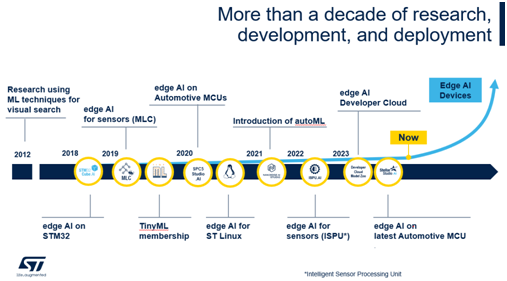

We’ve been investing in R&D for edge AI technology for more than 10 years. We now have an extensive software offering, and MPUs and MCUs with AI acceleration are coming soon. We’re currently supporting many of our customers in deploying edge AI-based solutions. In parallel, we are seizing the opportunities by strengthening our relationships and partnerships with customers and ecosystem partners, and by being deeply involved in technical support, including when customers are creating proof-of-concept demos. This allows us to help customers innovate.

And obviously, this is also helping us expand our business, giving us the opportunity to sell a wide variety of products to our customers, not only microcontrollers but also sensors and all the other devices present in the platform, and to create an ecosystem that both helps customers and links them to ST when they think about embedded AI.

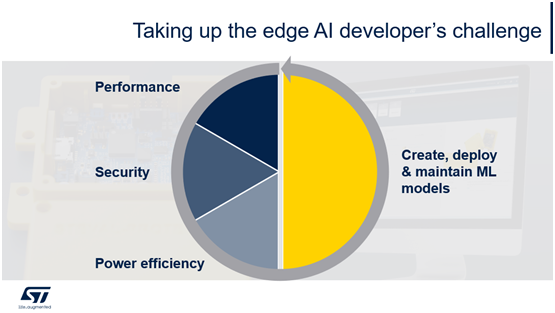

- What are the challenges for developers working on Edge AI?

There are some hardware and software challenges for the developers. On the hardware side, they need to ensure the overall performance of the application while considering security features and keeping power consumption low. As a semiconductor supplier, STMicroelectronics addresses these hardware challenges by providing a wide range of hardware devices tailored to different application needs. On the software side, developers must create, train, deploy, and maintain machine learning models within the final products, presenting a significant challenge for those addressing edge AI. STMicroelectronics is actively involved in assisting developers with hardware and software challenges, offering comprehensive solutions to support their edge AI endeavors.

- What do you say about STM32 being a de facto platform for embedded AI?

Our STM32 is the number one solution in terms of the number of projects submitted to the MLPerf Tiny benchmark: 73% of all submitted projects are based on STM32. This shows how our commitment over 10 years is paying off, and we see a huge number of developers developing and exploring edge AI on our platform. I think this is related to three main factors. The first is our leadership with our general-purpose STM32 MCUs for industrial and consumer applications. The second is our commitment as a contributor to edge AI benchmarks, such as MLPerf Tiny, since their inception. And last but not least, our online platform, the STM32 AI Developer Cloud, helps customers and developers easily test their models on a wide variety of STM32 boards with our online tools.

We believe all this has encouraged widespread AI innovation on the STM32.

- What are the automotive opportunities for Edge AI?

Automotive is going to be an increasingly big opportunity for edge AI. The most popular automotive use case for AI is ADAS for autonomous driving, where AI helps avoid accidents and dangerous situations caused by whatever is happening outside the car. Moving inside the car, several customers are already working on in-cabin monitoring to detect possibly dangerous situations inside the car. For example, we can monitor the driver’s sleepiness or fatigue, which can cause an accident, and prevent it by detecting whether the driver is sleepy and raising an alarm to wake him up. Moving even further inside the car, we may very soon see edge AI become pervasive, in combination with the many sensors in the car, across different kinds of use cases. One example is monitoring the state of health of the battery. We can also identify anomalies in the system, both mechanical and electrical, for example by using MEMS accelerometers to detect vibrations in the system.

- What is the idea behind introducing ST Edge AI Suite?

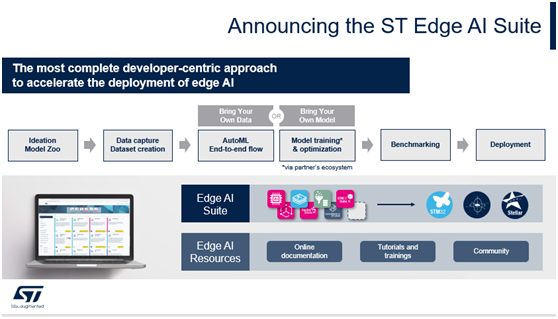

It is important to recognize that developing edge AI solutions requires multiple skills, and engineers with different profiles may work on an AI project. Each of these profiles has different requirements. The embedded software engineer needs to focus on the implementation of edge AI and how to integrate it into the overall system, so he may need a firmware project example to start from and customize for the specific application use case. The machine learning engineer, AI engineer, or data scientist will focus mainly on developing the machine learning model. He needs to focus not only on the data set and the machine learning model, but also on optimizing the model for the specific hardware device that will be selected. The hardware engineer needs a simple tool to test the AI algorithm provided by the data scientist on different hardware platforms or part numbers and to find the best compromise in terms of performance, power consumption, size, price, and so on.

So, you can understand that we want a single tool that covers all the needs of these engineers. This is why we were very pleased to announce our new ST Edge AI Suite.

Basically, in the ST Edge AI Suite we are bringing together all the different tools and building blocks needed throughout development: from creating the use case with the model zoo, to collecting the data set, to porting the model to the specific hardware. The AI model can also be created directly from data with our AutoML tool. Alternatively, customers can use their own models in the tool, whether developed in-house or by a third-party company, for model benchmarking and deployment. We want this tool to cover all the different hardware devices I mentioned before: MEMS sensors, microcontrollers, MPUs, and also automotive microcontrollers. In the same ST Edge AI Suite you will also find the resources you need, such as documentation, tutorials, access to the community, and so on.

We are integrating several tools into this comprehensive Edge AI Suite. This ecosystem is also compatible with external AI development ecosystems. We can use machine learning models trained with the most popular and widely used deep learning frameworks, such as TensorFlow Lite, Keras, PyTorch, and so on. We are compatible with NVIDIA TAO, and we also make it possible to connect to cloud services like AWS and Azure. Furthermore, we are compatible with simulation tools like MATLAB.

The core of this edge AI solution is what we call the ST Edge AI Core. This is a core library with a unified command-line interface that customers can use to evaluate a model and then port it to the specific target device. The tool can be used with STM32 microcontrollers, Stellar automotive microcontrollers, and MEMS sensors with hardware accelerators (MLC or ISPU).

On top of that, all these tools are free of charge: not only the ST Edge AI Suite, but also NanoEdge AI Studio. We are very glad to announce that since last December, NanoEdge AI Studio has been free of charge, with unlimited quantities, on any STM32. We also continue to support other Arm Cortex-M-based microcontrollers for our customers under a special license agreement.

- Is the ST Edge AI Suite development kit compatible with all the STM32 MCU and MPU products?

This is exactly the purpose of the ST Edge AI Suite. For example, take a model developed from data coming from a six-axis MEMS sensor: you can use the tool to translate it and evaluate it on the MEMS sensor itself, on an STM32 microcontroller, on an MPU, or on an automotive device. So you can test your model seamlessly across all the different devices. Of course, you need to take the model’s hardware constraints into account. We obviously cannot run a big computer vision model on a MEMS sensor. This is the only constraint.

- What opportunities and challenges will large models bring to edge AI?

Today we see large language models (LLMs), the most widely used being ChatGPT, which has become very popular in the AI community and far beyond. Still, these models are quite large and complex, and they typically still run on cloud services, on servers, or in any case on expensive hardware systems. We don’t yet see LLMs running on edge AI devices like the ones we are targeting today at ST. Of course, this is a challenge our engineers are looking at in terms of R&D, and there are even papers available on that specific point; for those who are interested, there is a lot of ST research available on edge AI challenges, for both the short and the longer term.

Obviously, we are keeping a close eye on LLMs and on the evolution of so-called Transformers, the basic architecture underlying LLMs. We believe that smaller models, whether Transformers or LLMs, with similar performance may soon appear and that, together with progress in AI accelerators, they may also run on edge AI devices in the near future (perhaps in a few years). So we are watching this closely to make sure that when the time is right, we will have the right hardware for it.

- As more and more manufacturers develop edge AI, and in particular, endpoint AI applications—some of which require long-term power for always-on applications—what strategies can you recommend these device developers to ensure efficient, low-power, and intelligent device operations?

The first strategy, for sure, is to move some of the processing of AI algorithms from the cloud to the edge. This is why we at ST are focusing on edge AI solutions. Moving the processing locally has a tremendous impact: it reduces the system’s power consumption, increases responsiveness and security, and decreases the overall cost of the solution.

The second strategy is to select devices with integrated AI accelerators and supporting software tools. We mentioned before the NPU for the STM32 family, but we also have AI accelerators like the ISPU inside our MEMS sensors. You can imagine that if you move your AI algorithm from an application processor to an MCU, for example, you are already saving a lot of power. And if you can then move your model (when it is small enough) from a microcontroller to a MEMS sensor, you can decrease the overall power again, from the milliampere range to the microampere range. So your AI algorithm can run continuously inside the MEMS sensor at extremely low power consumption, while the rest of the system stays in shutdown and is woken up only when needed.
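To put rough numbers on the milliampere-to-microampere argument, here is a back-of-the-envelope sketch in Python. Every figure in it (the 5 mA active MCU current, the 2 µA sleep current, the 50 µA in-sensor AI current, the 1% wake-up fraction, and the 1000 mAh battery) is a hypothetical placeholder, not an ST datasheet value. The code compares (a) an MCU running inference continuously with (b) an always-on model in the sensor that wakes a sleeping host only when needed, using a simple time-weighted average current.

```python
# Back-of-the-envelope power budget. All figures below are HYPOTHETICAL
# placeholders chosen for illustration, not measured or datasheet values.

def average_current_ua(active_ua: float, sleep_ua: float, duty_cycle: float) -> float:
    """Time-weighted average current (uA) of a part that is active for
    `duty_cycle` (0..1) of the time and asleep for the rest."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ua + (1.0 - duty_cycle) * sleep_ua


def battery_life_hours(capacity_mah: float, avg_current_ua: float) -> float:
    """Ideal battery life in hours (ignores self-discharge and regulator losses)."""
    return capacity_mah * 1000.0 / avg_current_ua


if __name__ == "__main__":
    BATTERY_MAH = 1000.0    # hypothetical battery capacity
    MCU_ACTIVE_UA = 5000.0  # hypothetical: MCU running inference (~5 mA)
    MCU_SLEEP_UA = 2.0      # hypothetical: MCU in a low-power stop mode
    SENSOR_AI_UA = 50.0     # hypothetical: always-on AI core inside the sensor
    WAKE_FRACTION = 0.01    # hypothetical: host woken 1% of the time

    # (a) The MCU runs the model all the time.
    always_on = average_current_ua(MCU_ACTIVE_UA, MCU_SLEEP_UA, 1.0)

    # (b) The sensor runs the model; the MCU sleeps and wakes only on events.
    sensor_first = SENSOR_AI_UA + average_current_ua(MCU_ACTIVE_UA, MCU_SLEEP_UA, WAKE_FRACTION)

    for name, current in (("MCU always on", always_on), ("sensor-first", sensor_first)):
        print(f"{name:>14}: {current:8.1f} uA average, "
              f"{battery_life_hours(BATTERY_MAH, current):8.0f} h battery life")
```

Under these assumed numbers the average current drops from 5 mA to roughly 0.1 mA, because the host spends 99% of its time asleep. The real gain depends entirely on the actual part currents and on how often the sensor has to wake the host.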

- How does Edge AI differ from cloud AI? How is ST making innovations to make edge AI the future?

The key difference is where the AI algorithm runs. In cloud AI solutions, the algorithms run on a remote server. This kind of solution fits situations where the AI model is quite big, such as the large language models we mentioned before, like ChatGPT, which need to run in the cloud.

But there are many other use cases and applications where the machine learning model is small enough that it can run more efficiently on the Edge: as we have seen before, on the NPU, microcontroller, or even sensors. This is the so-called Edge AI.

This means the raw data are not sent to the cloud but are used for inference locally. This enables new applications with better performance, ultra-low latency for real-time use, and significantly improved security, at a fraction of the cost of performing the same operation in the cloud. For smaller industrial use cases and consumer applications, it would not be economically viable to send the data to the cloud for analysis (though it could be viable for bigger ones).

- As more and more large AI models are starting to be available in the edge market, what segments or edge markets are going to see huge growth in the near future?

We have seen a real proliferation of use cases where AI is used, and edge AI is going to become pervasive, also with large-scale models. So it’s difficult today to identify one specific application, because there are so many applications in personal electronics, industrial, and automotive areas that will benefit from edge AI.

We see edge AI as especially key for smart buildings and asset tracking, to name just a few, and today we see very fast market adoption in industrial applications. Predictive maintenance is one example: smart sensors can help detect anomalies in a motor or a fan, enabling significant optimization as well as early problem detection and prevention. These improvements contribute to substantial productivity gains.
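As a rough illustration of vibration-based anomaly detection, the Python sketch below learns the typical RMS vibration level of a healthy machine and flags windows that deviate from it by more than k standard deviations. This is a simple statistical stand-in, not the machine learning approach used in ST’s tools; the synthetic signal generator `synthetic_window`, the 256-sample window, and the threshold k = 5 are all hypothetical choices made for the example.

```python
import math
import random
from statistics import mean, pstdev


def rms(window):
    """Root-mean-square amplitude of one window of accelerometer samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


class RmsAnomalyDetector:
    """Flags windows whose RMS vibration deviates from a learned healthy baseline."""

    def __init__(self, baseline_windows, k=5.0):
        features = [rms(w) for w in baseline_windows]
        self.mu = mean(features)
        self.sigma = pstdev(features) or 1e-12  # guard against a perfectly constant baseline
        self.k = k  # threshold in standard deviations (hypothetical value)

    def score(self, window):
        """Distance of the window's RMS from the baseline mean, in standard deviations."""
        return abs(rms(window) - self.mu) / self.sigma

    def is_anomaly(self, window):
        return self.score(window) > self.k


def synthetic_window(rng, amplitude, n=256, cycles=8, noise=0.05):
    """Hypothetical test signal: a sine at the rotation frequency plus Gaussian noise."""
    return [amplitude * math.sin(2 * math.pi * cycles * i / n) + rng.gauss(0.0, noise)
            for i in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    baseline = [synthetic_window(rng, 1.0) for _ in range(50)]  # "healthy" fan
    detector = RmsAnomalyDetector(baseline)

    healthy = synthetic_window(rng, 1.0)
    faulty = synthetic_window(rng, 3.0)  # e.g. a growing imbalance triples the vibration
    for name, w in (("healthy", healthy), ("faulty", faulty)):
        print(f"{name}: score={detector.score(w):.1f} anomaly={detector.is_anomaly(w)}")
```

A real deployment would typically extract richer features (for example, frequency-domain ones) and use a trained model, but the basic pattern of learning what normal looks like and flagging deviations carries over.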

From my point of view, we can group the benefits that edge AI brings to these applications into three categories. The first is an enhancement of current performance: the product performs better thanks to AI technology. The second is increased robustness to corner cases. Typically, when we are detecting something, there may be corner cases where a standard algorithm does not work well, while an AI algorithm can help. The third is the introduction of new features and functions that were not present before in the end product and that can help our customers make their products more innovative and appealing.