Mark Patrick, Mouser Electronics

If you were to ask the National Highway Traffic Safety Administration (NHTSA) what causes more accidents than anything else, the answer would simply be “the human driver of the vehicle.” In a study of over 2 million road accidents, NHTSA concluded that 94% were directly attributable to the driver. The greatest cause was inattention or distraction, which accounted for roughly four out of ten (41%) driver-related accidents. Road accidents continue to injure and kill a huge number of people each year. To address this, automakers are adding features that assist the driver, while developing even more sophisticated technology that will make vehicles fully self-driving in all situations and conditions.

Drivers have one primary role: to observe and assess what is going on, both on and close to the road, and then react, which typically means adjusting direction or speed. In doing this, the driver is (hopefully) considering a number of factors, including obstructions (actual and potential), road signs, speed limits, other road users and the weather. For a vehicle to take on the role of driver, either fully or in part, it must be able to perform this observation and reaction at least as well as a human and, ideally, better.

Automakers are now adding varying levels of advanced driver assistance systems (ADAS) to vehicles, and not just to top-of-the-range models. As a result, modern vehicles are bristling with sensors of various types. Vision-based sensing is the fastest-growing area, as more powerful and capable systems supplement basic ones such as reversing cameras.

Principal Applications for Vehicle Cameras

One of the more significant innovations is the forward-looking camera, as it covers the view where the driver should be looking most of the time. These cameras are becoming relatively common and, when combined with image analysis software, can detect obstacles in the vehicle’s path. If a steering sensor is present, the ADAS knows the vehicle’s trajectory and can determine whether evasive action is required. More sophisticated ADAS can predict the paths of objects such as pedestrians or other vehicles and determine whether they will intersect the vehicle’s own trajectory. Once objects and trajectories are determined, the ADAS can react by issuing a warning to the human driver, applying the brakes or even taking evasive action.
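
The trajectory check described above can be sketched with a constant-velocity model: project the vehicle and a tracked object forward in time and find their closest point of approach. This is a minimal sketch, assuming straight-line motion and positions and velocities that have already been estimated. The `Track` type, the `assess` function and its thresholds (2 m safety radius, 4 s horizon, braking below 1.5 s) are hypothetical and are not taken from any production ADAS.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Track:
    """Hypothetical object state in a ground-plane frame (metres, metres per second)."""
    x: float
    y: float
    vx: float
    vy: float


def closest_approach(ego: Track, obj: Track) -> Tuple[float, float]:
    """Return (time_s, distance_m) of closest approach, assuming constant velocities."""
    # Position and velocity of the object relative to the ego vehicle
    rx, ry = obj.x - ego.x, obj.y - ego.y
    vx, vy = obj.vx - ego.vx, obj.vy - ego.vy
    v2 = vx * vx + vy * vy
    if v2 == 0.0:
        # No relative motion: the separation never changes
        return 0.0, math.hypot(rx, ry)
    # Time that minimizes |r + v*t|; clamp to 0 if the closest point is already past
    t = max(-(rx * vx + ry * vy) / v2, 0.0)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def assess(ego: Track, obj: Track, safety_radius_m: float = 2.0,
           horizon_s: float = 4.0, brake_below_s: float = 1.5) -> str:
    """Map the predicted encounter to a hypothetical response: 'none', 'warn' or 'brake'."""
    t, d = closest_approach(ego, obj)
    if d > safety_radius_m or t > horizon_s:
        return "none"
    return "brake" if t < brake_below_s else "warn"


# Ego vehicle at 15 m/s; pedestrian 30 m ahead, 3 m to the side, crossing at 1.5 m/s
ego = Track(0.0, 0.0, 15.0, 0.0)
pedestrian = Track(30.0, -3.0, 0.0, 1.5)
print(closest_approach(ego, pedestrian), assess(ego, pedestrian))
```

In this example the pedestrian’s path meets the vehicle’s in 2 s. That falls inside the horizon but outside the braking band, so the sketch returns a warning. A real system would also account for measurement uncertainty and non-straight paths.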

Multiple cameras are being added to vehicles, especially larger ones such as SUVs. With several cameras, these vehicles can provide a virtual 360-degree view around the vehicle while maneuvering. This helps prevent scratches and small dents that are expensive to repair, but the real value is enabling the driver to see whether something, such as a small child or a pet, has moved into the vehicle’s path.
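
One way to check whether a set of cameras really gives an all-round view is to treat each camera’s horizontal field of view as an arc and look for gaps in their union. The sketch below assumes each camera is described only by a heading and a horizontal field of view in degrees. It ignores mounting position, parallax and the blind zone close to the body, and the example values are hypothetical.

```python
from typing import List, Tuple


def coverage_gaps(cameras: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Return the uncovered arcs (start_deg, end_deg) around the vehicle.

    Each camera is a (heading_deg, horizontal_fov_deg) pair; 0 degrees is straight ahead.
    """
    arcs = []
    for heading, fov in cameras:
        if fov >= 360:
            return []  # a single camera already sees everything
        start = (heading - fov / 2) % 360
        end = start + fov
        if end <= 360:
            arcs.append((start, end))
        else:
            # The arc wraps past 360 degrees, so split it into two pieces
            arcs.append((start, 360.0))
            arcs.append((0.0, end - 360))
    arcs.sort()
    gaps, covered_to = [], 0.0
    for start, end in arcs:
        if start > covered_to:
            gaps.append((covered_to, start))
        covered_to = max(covered_to, end)
    if covered_to < 360:
        gaps.append((covered_to, 360.0))
    return gaps


# Hypothetical layout: front, right, rear and left cameras
print(coverage_gaps([(0, 100), (90, 100), (180, 100), (270, 100)]))
print(coverage_gaps([(0, 80), (90, 80), (180, 80), (270, 80)]))
```

With four cameras of 100° each, the arcs overlap and no gaps are reported. At 80° each, the sketch reports four 10° blind arcs between adjacent cameras.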

Many countries are getting tough on speeding, with fines increasing, and excess speed has been shown to be a significant contributor to accidents that cause serious injury or even death. Using the existing forward-facing camera or possibly a dedicated camera, vehicles are now capable of recognizing and reading roadside speed limit signs. Many ADAS display a replica of the sign in the driver’s line of vision, using a heads-up display (HUD) if one is fitted. Other systems may sound an audible alert if the speed limit is exceeded, and more sophisticated implementations can link into the cruise control system to cap the vehicle’s speed at the posted limit.
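
The three levels of response described above (display, audible alert, speed cap) can be sketched as a small decision function that runs once a sign has been recognized. This is an illustrative sketch only: the `AssistLevel` names, the 2 km/h tolerance and the returned dictionary are assumptions, not any automaker’s interface.

```python
from enum import Enum
from typing import Optional


class AssistLevel(Enum):
    DISPLAY = 1  # show a replica of the sign (e.g. on a HUD)
    ALERT = 2    # display plus an audible alert when over the limit
    LIMIT = 3    # display, alert and cap the cruise-control set speed


def speed_assist(current_kph: float, limit_kph: float,
                 cruise_set_kph: Optional[float], level: AssistLevel,
                 tolerance_kph: float = 2.0) -> dict:
    """Decide what to show, whether to alert, and what cruise set speed to use."""
    over_limit = current_kph > limit_kph + tolerance_kph  # tolerance is hypothetical
    result = {"display_kph": limit_kph, "alert": False, "cruise_set_kph": cruise_set_kph}
    if level in (AssistLevel.ALERT, AssistLevel.LIMIT):
        result["alert"] = over_limit
    if level is AssistLevel.LIMIT and cruise_set_kph is not None:
        # Never let cruise control hold a speed above the recognized limit
        result["cruise_set_kph"] = min(cruise_set_kph, limit_kph)
    return result


print(speed_assist(58, 50, 70, AssistLevel.LIMIT))
```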

Vehicle lighting, like roadside lighting, is moving to LED technology, which delivers more reliable light with lower power consumption. These LEDs are pulsed, meaning that the light flickers. Humans cannot detect this because of persistence of vision, but an image sensor capturing the scene can record dark bands that make text and symbols hard to recognize. Vision systems often incorporate flicker-mitigation techniques, such as rapidly capturing several images and combining them into a composite image, so that signs lit by LEDs can be read as easily as those in natural light.
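
A simple way to combine several rapid captures is a per-pixel maximum. A pixel that was dark in one frame because the LED happened to be off takes its value from a frame in which the LED was on. This sketch shows only the general idea and is not the method used by any particular sensor. It assumes the frames are already aligned, with no motion between captures; production sensors may use dedicated on-chip techniques instead.

```python
from typing import List

Frame = List[List[int]]  # rows of pixel intensities


def flicker_composite(frames: List[Frame]) -> Frame:
    """Combine aligned, rapidly captured frames by taking the per-pixel maximum."""
    if not frames:
        raise ValueError("at least one frame is required")
    rows, cols = len(frames[0]), len(frames[0][0])
    # A pixel dark in one capture (LED off) is filled from a capture where the LED was on
    return [[max(frame[r][c] for frame in frames) for c in range(cols)]
            for r in range(rows)]


# Three captures of a tiny LED-lit sign, each with a dark band in a different row
bright, dark = [200, 200, 200, 200], [0, 0, 0, 0]
captures = [[dark, bright, bright], [bright, dark, bright], [bright, bright, dark]]
composite = flicker_composite(captures)
```

The maximum is chosen because the banding only ever darkens pixels. The trade-off is that it also keeps the brightest noise and can smear moving objects, so a real pipeline would weigh this against other fusion methods.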

Vehicle cameras are also starting to look inward, into the cabin. With driver distraction a primary cause of accidents, imaging technology is being used to monitor the driver’s alertness. A camera is pointed at the driver’s face, and image recognition software combined with artificial intelligence (AI) algorithms monitors the driver’s eye and head position to ensure they are attentive, looking forward and checking mirrors regularly. If the driver appears to be tiring, the system can recommend a rest break; in extreme cases, the ADAS can intervene and bring the vehicle to a safe halt. Should a collision occur, the camera can estimate the driver’s height and weight so that the airbag is deployed optimally.
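
One common way to turn per-frame eye observations into a drowsiness signal is PERCLOS: the proportion of time within a sliding window during which the eyes are mostly closed. The sketch below assumes an upstream vision model already supplies an eyelid-closure value for each frame (0.0 open, 1.0 closed). The 80% closure level and 15% alert threshold are illustrative assumptions, not values from any specific system.

```python
from collections import deque


class DrowsinessMonitor:
    """PERCLOS-style check over a sliding window of frames (thresholds are illustrative)."""

    def __init__(self, window_frames: int, closed_level: float = 0.8,
                 perclos_limit: float = 0.15):
        self.history = deque(maxlen=window_frames)  # True where the eyes count as closed
        self.closed_level = closed_level
        self.perclos_limit = perclos_limit

    def update(self, eyelid_closure: float) -> str:
        """eyelid_closure: 0.0 fully open to 1.0 fully closed, from an upstream vision model."""
        self.history.append(eyelid_closure >= self.closed_level)
        if len(self.history) < self.history.maxlen:
            return "warming_up"  # not enough history yet
        perclos = sum(self.history) / len(self.history)
        return "suggest_break" if perclos > self.perclos_limit else "ok"


# Hypothetical: a 30 fps camera with a one-minute window
monitor = DrowsinessMonitor(window_frames=30 * 60)
```

A "suggest_break" result would map to the rest-break recommendation described above. Escalating to a controlled stop would need further, stricter checks.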

While these systems are primarily designed for safety, they can also improve convenience and comfort. Simple face recognition can identify the driver and adjust the cabin settings (mirrors, seats, steering wheel, etc.) to their preferred positions. More sophisticated systems can monitor all vehicle occupants and infer whether they are comfortable; for example, if they look too hot or too cold, the climate control can be adjusted automatically.
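
Matching a face to a stored driver profile is often framed as comparing feature vectors (embeddings). The sketch below assumes a face-recognition model already produces such an embedding; the enrolled profiles, the settings dictionary and the 0.8 cosine-similarity threshold are all hypothetical. If no profile is similar enough, the driver is treated as unknown and the current settings are left alone.

```python
import math
from typing import Dict, List, Optional, Tuple

Profile = Tuple[List[float], dict]  # (enrolled face embedding, cabin settings)


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # treat a zero vector as "no match"


def identify_driver(embedding: List[float], profiles: Dict[str, Profile],
                    threshold: float = 0.8) -> Tuple[Optional[str], Optional[dict]]:
    """Return (name, settings) of the best-matching enrolled driver, or (None, None)."""
    best_name, best_score = None, threshold
    for name, (enrolled, _settings) in profiles.items():
        score = cosine_similarity(embedding, enrolled)
        if score >= best_score:  # must reach the threshold and beat earlier matches
            best_name, best_score = name, score
    if best_name is None:
        return None, None  # unknown driver: keep current settings
    return best_name, profiles[best_name][1]
```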

Technologies for Automotive Imaging

Automotive technology is developing rapidly, probably faster than ever before. Vehicles are becoming mobile computing systems, with many processors and a huge electronics content. To connect all these devices, automotive versions of Ethernet are replacing older technologies such as CAN bus for high-bandwidth links. As a result, vehicles have far more bandwidth available, enabling higher-resolution image sensors with higher frame rates that increase image quality and improve safety.

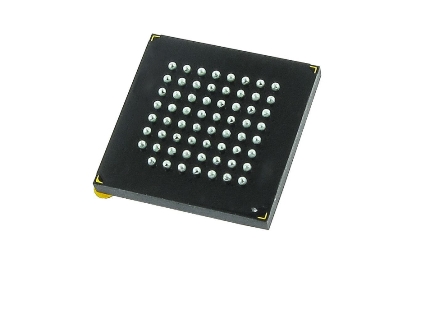

Figure 1: The AR0140AT image sensor from ON Semiconductor.

One of the latest image sensors to reach the market for automotive imaging is ON Semiconductor’s AR0140AT, a 1/4-inch 1.0-megapixel CMOS device with an active pixel array of 1280×800. The device is designed for the poor light conditions that often occur when driving and offers built-in high dynamic range (HDR) capability. Capable of capturing both still images and 60 fps video, the AR0140AT produces sharp, clear images in either mode. The device is PPAP-capable and has been qualified to AEC-Q100 for automotive applications.
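
The figures above can be used to show why in-vehicle bandwidth matters for imaging. This is a minimal sketch that assumes 12 bits per pixel of raw output and ignores blanking, compression and protocol overhead. On that basis, 1280 × 800 pixels at 60 fps comes to about 737 Mbit/s. That is far beyond classical CAN (1 Mbit/s) and above 100BASE-T1 Ethernet (100 Mbit/s), but within 1000BASE-T1 (1 Gbit/s).

```python
from typing import Dict

# Nominal link rates in bit/s, before protocol overhead
LINK_RATES_BPS: Dict[str, int] = {
    "Classical CAN": 1_000_000,
    "100BASE-T1 Ethernet": 100_000_000,
    "1000BASE-T1 Ethernet": 1_000_000_000,
}


def raw_video_bitrate(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Uncompressed pixel data rate in bit/s (ignores blanking and overhead)."""
    return width * height * fps * bits_per_pixel


def link_utilization(rate_bps: int) -> Dict[str, float]:
    """Share of each link's nominal capacity the stream needs (>1.0 means it won't fit)."""
    return {name: rate_bps / capacity for name, capacity in LINK_RATES_BPS.items()}


if __name__ == "__main__":
    rate = raw_video_bitrate(1280, 800, 60, 12)  # 12 bits/pixel is an assumption
    print(f"Raw stream: {rate / 1e6:.1f} Mbit/s")
    for name, share in link_utilization(rate).items():
        print(f"{name}: {share:.2f}x capacity")
```

Changing the assumed bit depth or frame rate scales the result linearly, so the same function can be used to compare sensor options.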

As vehicles rely more on technology and become more connected, the security of their systems becomes paramount. NXP’s FS32V234 vision processor contains a cryptographic services engine (CSE) with 16 KB of on-chip secure RAM and ROM, as well as a system JTAG controller, and can support ARM’s TrustZone security architecture. The device is also designed to meet automotive safety integrity level (ASIL) requirements as defined in ISO 26262, providing functional safety capability. To ease the qualification process, a full failure modes, effects and diagnostic analysis (FMEDA) report is offered, along with a safety manual.

Gesture-Based Vision Systems

With all of the systems in modern vehicles, interfaces are becoming more complex, relying on a plethora of buttons or complex LCD screens with multiple layers of menus. As an alternative, some automakers are considering the use of gesture-based controls so that interiors can be simplified and safety is enhanced, as drivers do not need to take their eyes off the road to look for a switch or scroll through menus.

Melexis has developed a chipset that uses time-of-flight (ToF) technology to deliver a gesture-based HMI. The chipset comprises two devices, the MLX75023 1/3-inch optical-format ToF sensor and the MLX75123 companion IC, which together enable designers to develop a 3D vision solution. Melexis has also made an evaluation module available so that designers can quickly become familiar with the technology. The highly integrated solution removes the need for external devices such as FPGAs. This significantly lowers BoM cost, reduces the space taken by the solution and shortens design cycle times.
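
Once a ToF sensor provides depth, a simple gesture such as a swipe can be recognized from the hand’s track over a few frames. Depth is used to ignore movement outside an interaction zone. This is a hypothetical sketch that assumes a hand-tracking stage already outputs a lateral position and a distance from the sensor for each frame. The zone limits and travel threshold are illustrative, and the code does not represent the Melexis chipset’s interface.

```python
from typing import List, Optional, Tuple


def classify_swipe(track: List[Tuple[float, float]], min_travel_m: float = 0.15,
                   near_m: float = 0.2, far_m: float = 0.6) -> Optional[str]:
    """track: per-frame (lateral_x_m, depth_m) of the hand centroid.

    Returns 'swipe_left', 'swipe_right' or None. All thresholds are illustrative.
    """
    # Use depth to keep only samples inside the interaction zone in front of the sensor
    xs = [x for x, depth in track if near_m <= depth <= far_m]
    if len(xs) < 3:
        return None
    travel = xs[-1] - xs[0]
    if abs(travel) < min_travel_m:
        return None
    # Require most frame-to-frame steps to move the same way, so jitter is not a swipe
    steps = [b - a for a, b in zip(xs, xs[1:])]
    consistent = sum(1 for s in steps if s * travel > 0)
    if consistent < 0.8 * len(steps):
        return None
    return "swipe_right" if travel > 0 else "swipe_left"
```

Filtering on depth first means a passenger reaching across the cabin further from the sensor is not mistaken for a command.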

Figure 2: Evaluation board for the MLX75023 ToF sensor.

Melexis’s advanced pixel technology gives the MLX75023 ToF sensor HDR capability, allowing it to work in challenging lighting conditions, from darkness at night to bright sunlight. The companion chip (MLX75123) links the sensor directly to a host controller, allowing relevant data to be read quickly. Because the system is modular, the sensor can be upgraded in the future to a higher resolution without requiring a major redesign.
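
For a continuous-wave (phase-based) ToF design, distance follows from the phase shift Δφ between the emitted modulated light and its reflection: d = c·Δφ / (4π·f_mod). Measurements repeat beyond the unambiguous range c / (2·f_mod). The sketch below shows this arithmetic. It assumes four correlation samples taken at 0°, 90°, 180° and 270°, modeled as q_k = B + A·cos(Δφ − k·90°); sign conventions differ between sensors. The 20 MHz modulation frequency used in the usage example is arbitrary and is not an MLX75023 specification.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def unambiguous_range(f_mod_hz: float) -> float:
    """Maximum distance before the measured phase wraps around."""
    return C / (2 * f_mod_hz)


def phase_to_distance(phase_rad: float, f_mod_hz: float) -> float:
    """Distance for a phase shift of the modulated light (round trip is 2d)."""
    return C * phase_rad / (4 * math.pi * f_mod_hz)


def phase_from_samples(q0: float, q1: float, q2: float, q3: float) -> float:
    """Phase from samples at 0, 90, 180, 270 degrees, assuming q_k = B + A*cos(phi - k*pi/2)."""
    # Differences cancel the ambient/offset term B: q0-q2 = 2A*cos(phi), q1-q3 = 2A*sin(phi)
    return math.atan2(q1 - q3, q0 - q2) % (2 * math.pi)


f_mod = 20e6  # hypothetical modulation frequency
print(f"Unambiguous range: {unambiguous_range(f_mod):.2f} m")
```

At 20 MHz the unambiguous range is about 7.5 m, which is ample for in-cabin gesture sensing. Lowering the frequency extends the range at the cost of depth resolution.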

Conclusion

Vehicles have more technology than ever before as automakers strive to improve economy and convenience while also enhancing safety. Sensors are now found throughout vehicles, providing the basic information ADAS needs to support the driver and, in the future, to take over most or all of the driver’s role.

Image sensors offer a huge amount of flexibility, with the ability to recognize and read road signs as well as detect objects and their trajectories. Because image sensing technology has improved significantly in recent years, these devices now offer the resolution and frame rates required for high-quality imaging. At the same time, improvements to the fundamental pixel technology maintain this quality even in the poor lighting often encountered while driving.