Mark Patrick, Mouser Electronics

Between 20 and 50 million people suffer non-fatal injuries on the world’s roads each year, while more than 1.2 million people unfortunately die, according to a report by the World Health Organization (WHO). If we continue on this trajectory, road traffic accidents will become the fifth-largest cause of death by the year 2030. Clearly this has to be addressed – and, while initiatives are in place, many organizations including WHO believe that the changes are happening too slowly.

Driver distraction is cited as the main contributing factor in more than four in five accidents. The growing use of cell phones that tempt drivers to text or call while driving, even though this is illegal in many countries, has contributed significantly to this number. Cell phones are a significant factor in 1.6 million accidents in the USA each year, according to the US Department of Transportation (DoT). As a result of cell phone usage, 500,000 people are injured and 6,000 are killed.

It seems that legislation is not the solution to this issue, as too many ignore the existing laws – but as a society, we do need to find a solution. Better driver training may go part of the way but, as design engineers, should our contribution be to make smarter vehicles that will prevent humans doing dangerous things?

While fully autonomous vehicles may be on the horizon, semi-automation has arrived in the form of advanced driver assistance systems (ADAS). Until recently these systems were reserved for high-end vehicles, but advancements in sensing technology and reductions in deployment costs have seen them added to entry-level models. ADAS is seen as a key step on the way to autonomous vehicles, and an important contributor to road safety.

ADAS is big business; Global Market Insights sees the market more than doubling (from USD 28.9 billion to USD 67 billion) between 2017 and 2024. It also recognizes that this may be a conservative estimate, as increasing legislation around vehicle safety is mandating more and more safety features, including reversing cameras and autonomous emergency braking that will grow the market more rapidly.

Vision is Vital

ADAS is able to control vehicle steering, braking and acceleration – either alone, or in conjunction with a driver. To do this, it needs to sense the environment around the vehicle, and this usually involves a combination of several technologies including ultrasonics, radar, image sensors, infra-red (IR) imaging and/or LiDAR. Image sensing is growing in the automotive sphere, both for external vision and more recently within the cabin. A recent Gartner study estimates that external sensors alone will drive a market value of USD 1.8 billion by 2022.

ADAS costs have reduced through the development of improved camera technology, meaning that it is now being fitted to mid-range and even entry-level vehicles. While ADAS systems comprise many sensors, the importance of cameras is increasing as they are used for more functions than ever before. Outward-looking cameras can provide a multitude of functions in conjunction with the appropriate algorithms. As well as detecting obstacles such as other vehicles and pedestrians, they are often key parts of systems that monitor lane discipline, assist with parking, read and display speed limit signs, monitor blind spots and provide rearward visibility when reversing.

Statistics show that most accidents are caused by driver inattention, so one of the fastest-growing areas of ADAS is driver monitoring via an inward-facing camera. Here, the ADAS system checks whether the driver is alert and not distracted, or whether the system will have to take a greater role in ensuring safety. The camera monitors the driver’s eyes for blinking patterns that indicate fatigue, and checks that the driver is facing the road ahead (and not gazing into a smartphone, for example).
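One widely used way to turn the eye-closure cue into a number is PERCLOS, the proportion of recent frames in which the eyes are mostly closed. The following is a minimal sketch only. It assumes an upstream vision stage already supplies a per-frame eye-openness value between 0 and 1. The class name, the window length and both thresholds are illustrative assumptions, not values taken from any production ADAS system.

```python
from collections import deque


class PerclosMonitor:
    """Rolling fraction of frames in which the eyes are judged closed."""

    def __init__(self, window_frames=900, closed_below=0.2, alert_above=0.15):
        # All three defaults are illustrative assumptions, not calibrated values.
        self.closed_below = closed_below   # openness below this counts as closed
        self.alert_above = alert_above     # PERCLOS above this flags drowsiness
        self.frames = deque(maxlen=window_frames)  # oldest frames drop off

    def perclos(self):
        """Return the closed-eye fraction over the current window."""
        if not self.frames:
            return 0.0
        return sum(self.frames) / len(self.frames)

    def update(self, eye_openness):
        """Add one frame (0.0 = fully closed, 1.0 = fully open)."""
        self.frames.append(eye_openness < self.closed_below)
        return self.perclos()

    def is_drowsy(self):
        """True when the closed-eye fraction exceeds the alert threshold."""
        return self.perclos() > self.alert_above
```

A real system would combine a measure like this with head pose and gaze direction before deciding that the driver is distracted or fatigued.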

Vehicles are often used in less-than-ideal conditions, and ADAS systems must ensure safety at all times. ON Semiconductor is at the forefront of automotive imaging technology, and is developing sensors that perform well in low-light and high-contrast situations. Its CMOS AR0230AT has a 1/2.7-inch sensor with a 1928×1088 active-pixel array. It can capture images conventionally or in high dynamic range (HDR) mode using a rolling-shutter readout. Capable of both video and single-frame operation, the sensor includes functionality such as in-pixel binning and windowing. Suitable for both low-light and high-contrast use, the sensor is designed to be energy efficient and is programmed via an easy-to-use two-wire serial interface.
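To make the terms windowing and binning concrete, the sketch below applies both to a small frame held as a list of rows. On a real sensor these operations are performed on-chip and configured through registers, so this is only a host-side illustration of the concepts. It assumes a monochrome frame and averaging (rather than summing) of each 2×2 block, and the function names are hypothetical.

```python
def window(frame, top, left, height, width):
    """Return the height x width region of interest starting at (top, left)."""
    return [row[left:left + width] for row in frame[top:top + height]]


def bin_2x2(frame):
    """Average each 2x2 block of pixels into a single output pixel."""
    # Ignore a trailing odd row or column so every block is complete.
    rows = len(frame) // 2 * 2
    cols = len(frame[0]) // 2 * 2
    return [
        [
            (frame[r][c] + frame[r][c + 1]
             + frame[r + 1][c] + frame[r + 1][c + 1]) / 4
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]
```

Applied to a full 1928×1088 array, 2×2 binning would give a 964×544 image, trading resolution for lower data rate and, typically, lower noise per output pixel.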

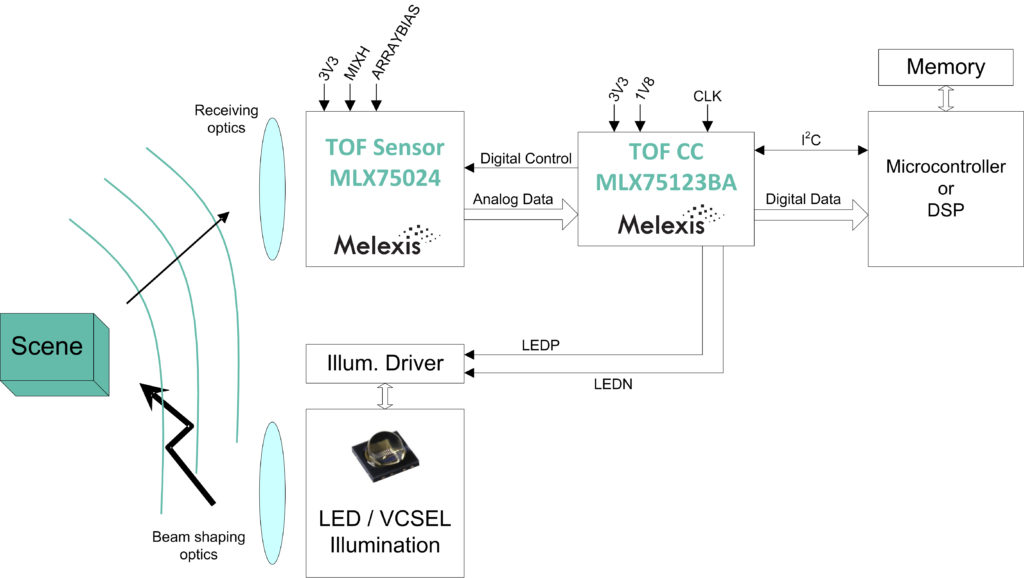

Figure 1: Melexis ToF imaging chipset.


Looking inside the cabin is a key role for Melexis’s MLX75x23 time-of-flight (ToF) 3D imaging solution, which can measure the position of an object in space, such as a driver’s hand making a control gesture. Suitable for use in harsh lighting, the companion chipset features a 320×240 QVGA sensor based on Melexis’s DepthSense pixel technology. Accompanying the sensor is the MLX75123, which controls the ToF sensor and illumination as well as providing an interface to the host processor. The system is AEC-Q100 qualified for automotive applications. Optimized for in-cabin use, the ToF solution offers flexibility and performance in a compact footprint.
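The principle behind ToF depth measurement can be shown with a short calculation. The sketch below assumes a phase-measuring (continuous-wave) scheme, in which distance is derived from the phase shift between emitted and returned modulated light. The article does not describe how the chipset works internally, so both the scheme and the 20MHz modulation frequency used in the usage note are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_distance(phase_rad, mod_freq_hz):
    """Distance in metres for a measured phase shift of the returned light.

    The light travels out and back, hence 4*pi rather than 2*pi.
    """
    return SPEED_OF_LIGHT * phase_rad / (4 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT / (2 * mod_freq_hz)
```

With an assumed 20MHz modulation frequency, `unambiguous_range(20e6)` is roughly 7.5m, which comfortably covers a vehicle cabin. A higher modulation frequency shortens that range but improves depth precision.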

Cars Learn to See Better than Humans

Detecting that there is something in front of the vehicle is one thing; determining what it is can be quite another. Sensor data is processed and analyzed by sophisticated vision processors to recognize people, movement, patterns, obstacles and important street signs so that the ADAS system can take appropriate action. One such device is NXP Semiconductors’ S32V234 vision and sensor fusion processor IC, specifically intended for this type of processor-centric image processing and analysis. The powerful device has an embedded image sensor processor, dual APEX-2 vision accelerators, a high-performance graphics processing unit (GPU) and security functions. As this processor is included within the NXP SafeAssure program, it can be used in ADAS applications that require functional safety compliant with ISO 26262 ASIL B, including lane departure warning, traffic sign recognition, smart headlamp control and pedestrian recognition.

The S32V234 is based on quad 64-bit ARM Cortex-A53 cores that run at speeds of up to 1GHz. It also incorporates a NEON co-processor and an ARM Cortex-M4 processor that provides a sophisticated external interface.

Another device in this vein is the TDA3x SoC series from Texas Instruments, which is also aimed at ADAS applications. The devices blend low power consumption with high performance, as they are capable of processing signals at 745MHz. The small form-factor SoCs support full-HD video at 1920×1080 resolution at 60fps and allow for multiple vision-oriented functions including front camera, rear camera, surround view, radar and sensor fusion.

Figure 2: S32Rx radar MCUs from NXP.


Long-Range Radar

Long-range radar with high resolution capability will be a key element of future safety-critical systems, and it will need capable microcontrollers behind it. NXP’s S32Rx radar MCUs are 32-bit devices capable of the intensive processing necessary for modern beam-forming, fast chirp modulation radar systems. They feature radar I/F and processing, plus dual e200z cores and capacious system memory. The devices are AEC-Q100 grade 1 qualified for automotive applications, and are offered in 257 MAPBGA packages. Typical applications will include those mandated by governments, such as adaptive cruise control, autonomous emergency braking and rear traffic crossing alerts.
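The processing load comes from turning each chirp into range information. As a rough sketch, the standard relationships for a linear frequency chirp are shown below: the beat frequency between the transmitted and received signals is proportional to target range, and range resolution depends only on the swept bandwidth. The function names and the example figures in the usage note are illustrative assumptions and are not S32Rx specifications.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_beat(beat_hz, bandwidth_hz, chirp_s):
    """Target range in metres from the measured beat frequency.

    bandwidth_hz is the frequency swept during one chirp of chirp_s seconds.
    """
    return SPEED_OF_LIGHT * beat_hz * chirp_s / (2 * bandwidth_hz)


def range_resolution(bandwidth_hz):
    """Smallest range separation at which two targets can be told apart."""
    return SPEED_OF_LIGHT / (2 * bandwidth_hz)
```

For an assumed 1GHz sweep over 50µs, `range_resolution(1e9)` is about 0.15m, and a 1MHz beat frequency corresponds to a target roughly 7.5m away. Fast chirp systems repeat this measurement many times per frame and across several antennas, which is where the demand for dedicated radar processing arises.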

Full Autonomy: The Future

We are still at the start of the journey to full autonomy, at least in terms of vehicles available for the public to buy. The US-based Society of Automotive Engineers (SAE) mapped out six levels of automation, ranging from no autonomy at all (0) to full autonomy (5). Even the most advanced commercially available vehicles can only be classified as level 2.

Even with all of the advances in vision systems and other technologies, fully autonomous vehicles remain some way away. Progress is hard to predict, although some estimates suggest that 15 years will elapse before level 5 vehicles reach the market. However, the pace of development is rapid, and many OEMs are deploying sub-systems, all of which contribute to achieving this goal. ABI Research predicts that by 2025 there will be 8 million level 3 and 4 vehicles shipping annually. While these vehicles will still need a driver, many safety-critical functions will be fully automated under the correct conditions.

A completely driverless taxi system is being developed for deployment in Israel this year, with full rollout over a 30-year period. The project is driven by a consortium of three major players: Volkswagen will supply an electric vehicle as a platform, Mobileye will be in charge of the automation by adding hardware and software, and Champion Motors will manage the fleet and its maintenance.

Technology development is not the only driver for automated vehicles. There is also a need for society to become comfortable with the technology. There are also legal topics to be addressed, particularly in terms of liability for accidents when the driver is effectively a passenger. While there are many uncertainties, it is certain that at some point in the future, autonomous cars will be in the majority on our roads.