The data acquisition market is growing and is expected to see significant changes in the foreseeable future, including broader adoption of wireless technology. Other factors expected to boost market growth include rising demand from Asia, accelerating adoption of Ethernet, and growing demand for modular instrumentation.

Data Acquisition is simply the gathering of information about a system or process. It is a core tool for understanding, controlling and managing such systems or processes. Sensors gather parameter information such as temperature, pressure or flow and convert it into electrical signals. Sometimes only one sensor is needed, such as when recording local rainfall; sometimes hundreds or even thousands are needed, such as when monitoring a complex industrial process. The sensor signals are transferred by wire, optical fibre or wireless link to an instrument that conditions, amplifies, measures, scales, processes, displays and stores them. This is the Data Acquisition instrument.
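As an illustration of the "scales" step, the sketch below converts a raw current-loop reading into engineering units. It assumes a hypothetical 4-20 mA pressure transmitter spanning 0-10 bar and treats readings below 3.6 mA or above 21 mA as a sensor or wiring fault; these ranges and the function names are illustrative assumptions, not taken from any particular instrument.

```python
def scale_linear(raw: float, raw_lo: float, raw_hi: float,
                 eng_lo: float, eng_hi: float) -> float:
    """Map a raw reading linearly from [raw_lo, raw_hi] onto [eng_lo, eng_hi]."""
    if raw_hi == raw_lo:
        raise ValueError("raw span must be non-zero")
    fraction = (raw - raw_lo) / (raw_hi - raw_lo)
    return eng_lo + fraction * (eng_hi - eng_lo)


# Assumed fault limits: a healthy 4-20 mA loop should not read far outside
# its span, so values beyond these are treated as a broken wire or sensor.
FAULT_LOW_MA = 3.6
FAULT_HIGH_MA = 21.0


def loop_current_to_bar(milliamps: float) -> float:
    """Convert a reading from a hypothetical 4-20 mA, 0-10 bar transmitter to bar."""
    if not FAULT_LOW_MA <= milliamps <= FAULT_HIGH_MA:
        raise ValueError(f"loop current {milliamps} mA indicates a fault")
    return scale_linear(milliamps, 4.0, 20.0, 0.0, 10.0)


if __name__ == "__main__":
    for reading_ma in (4.0, 12.0, 20.0):
        print(f"{reading_ma:5.1f} mA -> {loop_current_to_bar(reading_ma):5.2f} bar")
```

Keeping the linear mapping separate from the transmitter-specific constants means the same function could scale voltage inputs or transmitters with other spans.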

In the past, Data Acquisition equipment was largely mechanical, using smoked drums or chart recorders. Later, electrically powered chart recorders and magnetic tape recorders were used. Today, powerful microprocessors and computers perform Data Acquisition faster, more accurately and more flexibly, handling more sensors, more complex data processing and more elaborate presentation of the final information.

Data Logger

A data logger is an electronic instrument that connects to real-world devices in order to collect information. A familiar example is the “black box” flight recorder in aeroplanes, which mainly records cockpit voice and flight data.

Real-world devices could include the following:

- Pressure sensors & strain gauges

- Flow and speed sensors

- Current loop transmitters

- Weather & hydrological sensors

- Laboratory analytical instruments

- And much more…

Evolution in Data Acquisition

Data Acquisition technology continues to evolve, with high-speed data interfaces and networking forcing major changes to previous practices. Sensitive low-level signals can now be measured in the field, with only the desired data returned to a remote computer for analysis. This is the function of a dataTaker data logger or DAQ box, which provides the functionality and speed of a DAQ board and adds the standalone capability to process, consolidate and log data for later downloading. A series of data loggers interconnected by a network allows data to be gathered closer to the sensors, improving signal quality and reducing installation cost.
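The "process, consolidate and log" role can be illustrated with a minimal sketch that reduces raw samples to per-interval minimum, maximum and mean records, the kind of summary a logger might store for later download. The `Summary` record and `consolidate` function are hypothetical names, and real loggers offer many other statistics.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import Iterable


@dataclass
class Summary:
    start_s: float   # start time of the interval, in seconds
    count: int       # number of raw samples in the interval
    minimum: float
    maximum: float
    mean: float


def consolidate(samples: Iterable[tuple[float, float]],
                interval_s: float) -> list[Summary]:
    """Group (timestamp_s, value) samples into fixed intervals and summarize each."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    buckets: dict[int, list[float]] = {}
    for timestamp_s, value in samples:
        index = int(timestamp_s // interval_s)  # which interval this sample falls in
        buckets.setdefault(index, []).append(value)
    return [
        Summary(start_s=index * interval_s, count=len(values),
                minimum=min(values), maximum=max(values), mean=fmean(values))
        for index, values in sorted(buckets.items())
    ]


if __name__ == "__main__":
    readings = [(0.0, 20.1), (0.4, 20.5), (1.2, 21.0), (1.8, 20.7)]
    for row in consolidate(readings, interval_s=1.0):
        print(row)
```

Storing one summary per interval instead of every sample is what lets a logger hold long records in limited memory and send only the consolidated data over the network.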

Electronic data loggers have been integral to data collection since their inception, with widespread adoption beginning in the early 1980s. Data loggers are used in a variety of industries and applications, including remote water resource monitoring, weather monitoring, machine monitoring, oil and gas projects, building HVAC control, and structural vibration monitoring in bridges and buildings.

A data logger’s primary purpose is to automatically collect data points from sensors and make them available for review, analysis and decision making. Many data loggers can also be programmed for other purposes, such as raising alarms or performing control actions when set conditions are met.
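A minimal sketch of the alarm capability, assuming simple fixed low and high limits: every reading is logged, but an alarm event is recorded only when the reading changes state (for example from OK to HIGH), so a sustained excursion does not flood the log. The class, method and state names are hypothetical.

```python
class AlarmingLogger:
    """Logs every reading and records an event whenever the alarm state changes."""

    def __init__(self, low_limit: float, high_limit: float) -> None:
        if low_limit >= high_limit:
            raise ValueError("low_limit must be below high_limit")
        self.low_limit = low_limit
        self.high_limit = high_limit
        self.records: list[tuple[float, float]] = []  # (timestamp_s, value)
        self.events: list[tuple[float, str]] = []     # (timestamp_s, new state)
        self._state = "OK"

    def classify(self, value: float) -> str:
        """Return LOW, HIGH or OK for a single reading."""
        if value < self.low_limit:
            return "LOW"
        if value > self.high_limit:
            return "HIGH"
        return "OK"

    def log(self, timestamp_s: float, value: float) -> str:
        """Store the reading, record a state-change event if needed, return the state."""
        self.records.append((timestamp_s, value))
        state = self.classify(value)
        if state != self._state:
            self.events.append((timestamp_s, state))
            self._state = state
        return state
```

Recording transitions rather than every out-of-limit sample is one common design choice; a real logger might also add a deadband or minimum duration before declaring an alarm.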

The microprocessor-based data logger was a revolutionary data acquisition tool. It replaced most of the earlier mechanical paper-chart and punched-tape recorders, whose records had to be either transcribed manually or scanned with special equipment to create a digital file.

The data acquisition industry may be at the beginning of another revolutionary change, driven by advances in cloud computing on the Internet.

Introduction to Cloud Computing on the Internet

“Cloud computing” is a relatively new term, but the concept has long been a vision of Internet application developers. That vision is now a reality, and it is growing rapidly in availability and acceptance. Embracing cloud computing is a paradigm shift in how software is accessed and where relevant data is collected, stored and processed.

Broken down to its simplest form, the Internet is a network of computers, many of them servers, used to store vast amounts of data and to host web sites, send and receive email, share photos, distribute movies, music and games, and support other online activities.

Cloud computing is the concept of moving localized computer processing, programs and data to Internet servers for easier and more secure access. An analogy is the difference between every home and business running its own electric power generation plant and a remote, centralized power plant serving many homes and businesses. In this analogy, the power plants are the remote server farms, and the networks that transmit and distribute data are the power lines. The trend is towards purchasing a data plan or service rather than maintaining powerful computing hardware and software at each location.

Benefits of cloud computing include real-time access to information, processing power that scales as demand increases, less risk of downtime than with localized networks, and direct interaction with other web services, which enhances the quality and relevance of the information for better-informed decision making.

Software can be written to run “on the cloud” in much the same way as Microsoft Office applications run on a personal computer or local network server. Instead of being installed and run locally, the software and related data files reside on a remote server accessible over the Internet. Many large companies run cloud computing services, such as Amazon’s S3 service, Google’s App Engine and Microsoft’s Windows Azure platform.

Market and Growth – Global Outlook

With industrial development widespread in Asia-Pacific, Latin America and the Middle East, the data acquisition market is expected to grow rapidly in these regions in the coming years. New factories are being built with the most up-to-date technology, including wireless and industrial wireline networks; with no legacy equipment to replace, customers will deploy new technology from the start.

New applications of sensors in oil and gas, medical equipment and pharmaceutical manufacturing will help fuel the market for data acquisition solutions. The concept of big analog data, with wireless and wireline sensors capturing reams of data from manufacturing floors, refineries, oil fields and the like, is becoming a reality. Suppliers offering the best data acquisition solutions will enjoy robust growth.

Industry Outlook on DAQ key trends, technologies and applications

Spokesperson Details:

Application Engineer, Electronic Measurement Group, Agilent Technologies India Pvt. Ltd.

Technical Marketing Manager, National Instruments, India

Outlook on Data Acquisition Industry, key trends and emerging applications

Navjodh Dhillon @Agilent Technologies India:

Modern data acquisition (DAQ) systems take advantage of existing computing and software technology for their performance and capabilities. Computer technology has grown by leaps and bounds in the past decade; this has benefited data acquisition systems and will continue to do so.

Increased processing power allows DAQ systems to run more complex operating systems and control software, enabling more advanced capabilities. Many data acquisition systems can also run standalone, without an external PC, and automatically collect data or control an external system once configured to do so. An example is a temperature-controlled chamber, where the data acquisition system continuously monitors the temperature and switches a fan or heater based on set temperature conditions. It could also generate alarms when certain conditions occur.
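The temperature-chamber example can be sketched as on/off (bang-bang) control with hysteresis, which is one common way to implement it rather than the specific method of any instrument. The heater switches on below the set point minus a band and off once the set point is reached; the fan mirrors this above the set point, and an alarm is flagged when the temperature strays too far. The class name, the 0.5 °C band and the 5 °C alarm margin are arbitrary assumptions.

```python
from dataclasses import dataclass


@dataclass
class ChamberController:
    """On/off control of a heater and fan with a hysteresis band (illustrative)."""
    setpoint_c: float
    band_c: float = 0.5          # assumed hysteresis band, degrees C
    alarm_margin_c: float = 5.0  # assumed alarm threshold, degrees C from set point
    heater_on: bool = False
    fan_on: bool = False

    def update(self, temp_c: float) -> bool:
        """Apply one temperature reading; return True if an alarm should be raised."""
        # Heater: on when too cold, off only once the set point is reached.
        if temp_c < self.setpoint_c - self.band_c:
            self.heater_on = True
        elif temp_c >= self.setpoint_c:
            self.heater_on = False
        # Fan: on when too warm, off only once back down to the set point.
        if temp_c > self.setpoint_c + self.band_c:
            self.fan_on = True
        elif temp_c <= self.setpoint_c:
            self.fan_on = False
        return abs(temp_c - self.setpoint_c) > self.alarm_margin_c
```

Holding each output in its previous state inside the band keeps the relays from chattering when the temperature hovers near the set point, and the heater and fan are never on at the same time.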

PC interface bus technologies have continuously improved, with a focus on higher data throughput and reliability. Today there are many options for connecting a data acquisition system. Newer interfaces such as USB 3.0, 1 Gigabit and 10 Gigabit Ethernet, and PCI Express generation 3 and now 4 offer unprecedented data throughput and reliability at a reasonable cost, in addition to existing standards such as GPIB, LAN, USB 2.0, and PCIe 1.0 and 2.0. Costs are expected to fall further as adoption of the newer standards increases.
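Whether a given interface can sustain a measurement comes down to simple arithmetic: the required bit rate is the number of channels times the sample rate times the bits transferred per sample. The sketch below compares that figure with the nominal signalling rates of a few of the interfaces mentioned. Usable throughput is always lower than the nominal rate because of protocol overhead, so the 50 % usable fraction is a deliberately conservative assumption, not a measured value.

```python
# Nominal signalling rates in bits per second; real sustained throughput is lower.
NOMINAL_BITS_PER_S = {
    "USB 2.0": 480e6,
    "USB 3.0": 5e9,
    "1 Gigabit Ethernet": 1e9,
    "10 Gigabit Ethernet": 10e9,
}


def required_bits_per_s(channels: int, samples_per_s: float,
                        bits_per_sample: int) -> float:
    """Aggregate data rate produced by the acquisition front end."""
    return channels * samples_per_s * bits_per_sample


def link_can_sustain(link: str, channels: int, samples_per_s: float,
                     bits_per_sample: int, usable_fraction: float = 0.5) -> bool:
    """True if the assumed usable share of the link's nominal rate covers the load."""
    capacity = NOMINAL_BITS_PER_S[link] * usable_fraction
    return required_bits_per_s(channels, samples_per_s, bits_per_sample) <= capacity


if __name__ == "__main__":
    # Example load: 16 channels at 1 MS/s, each sample sent as a 16-bit word.
    load = required_bits_per_s(16, 1e6, 16)
    print(f"required: {load / 1e6:.0f} Mbit/s")
    for name in NOMINAL_BITS_PER_S:
        verdict = "ok" if link_can_sustain(name, 16, 1e6, 16) else "too slow"
        print(f"{name:20s} {verdict}")
```

Under these assumptions, 16 channels at 1 MS/s need 256 Mbit/s, more than the 240 Mbit/s budgeted for USB 2.0 but well within the other three links.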

The same is true of wireless standards. With the advent of LTE, WLAN 802.11ac, Bluetooth 4.0 and others, in addition to existing wireless and cellular standards such as WLAN, Bluetooth 2.0/3.0, Zigbee, GSM and 3G, we now have a multitude of connectivity options, and data throughput is no longer a major limitation for wireless links. Improvements in wired and wireless connectivity standards have also boosted remote data acquisition applications. An example is a data acquisition system deployed at a nuclear reactor, where it can continuously acquire and transmit large amounts of data over wired or wireless links without the need for human intervention.

Data acquisition systems can now be wired or wireless, as the application demands, without the connectivity link being a limitation.

Mobile devices are another area that has grown in adoption, and it was only a matter of time before data acquisition took advantage of them. It is now possible to connect remotely to data acquisition systems and view and analyze data on the go. Most mobile devices also have many kinds of sensors built in, such as accelerometers, compasses, gyroscopes, temperature sensors and ambient light sensors, which further multiply the possibilities for how these systems can be used and how they will evolve. Active development is expected to continue in this area, and data acquisition will impact our lives even more than before.

Data acquisition systems today are more in sync with existing hardware and software technology than ever before, and will remain so for the foreseeable future.

Satish Mohanram @National Instruments, India:

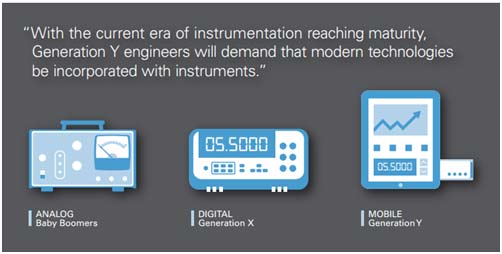

Each generation of engineers has seen new generations of instrumentation. Baby Boomers (born in the 1940s to 1960s) used cathode-ray oscilloscopes and multimeters with needle displays, now commonly referred to as “analog” instruments. Generation X (born in the 1960s to 1980s) ushered in a new generation of “digital” instruments that used analog-to-digital converters and graphical displays. Generation Y (born in the 1980s to 2000s) is now entering the workforce with a new mindset that will drive the next generation of instrumentation.

Generation Y has grown up in a world surrounded by technology. From computers, to the internet, and now mobile devices, this technology has evolved at a faster rate than ever before. A recent report from Cisco delved into the nature of Generation Y and their relationship with technology:

- Smartphones rated twice as popular as desktop PCs

- 1/3 of respondents check their smartphones at least once every 30 minutes

- 80% use at least one app regularly

- Two out of three spend equal or more time online with friends than in person

Generation Y is obsessed with technology. They embrace change and quickly adopt new technologies because they understand the benefits that they provide. The innovation in consumer electronics, which Generation Y engineers use in their daily lives, has outpaced the instruments they use in the professional setting. In fact, the form factor of benchtop instruments has remained mostly unchanged over the years. All components – display, processor, memory, measurement system, and knobs/buttons – are integrated into a single, stand-alone device.

With the current era of instrumentation reaching maturity, Generation Y engineers will demand that modern technologies be incorporated into instruments. Instrumentation in the era of Generation Y will incorporate touchscreens, mobile devices, cloud connectivity, and predictive intelligence to provide significant advantages over previous generations.

Technologies and methodologies impacting the data acquisition industry

Navjodh Dhillon @Agilent Technologies India:

At the most basic level, data acquisition is the digitization of signals using an analog-to-digital converter (ADC), followed by processing, storage and/or analysis on either the acquisition system itself or an external PC. ADC technology has advanced by leaps and bounds, and today it is not the only factor limiting data acquisition system capabilities.

Hardware is important in a data acquisition system; for example, the resolution and dynamic range of the ADC largely determine how accurately the digital representation matches the actual analog input. At the same time, it is critical to have software capabilities that make efficient use of the hardware. The connectivity interface must also support a data rate high enough to sustain transfer from the ADC to device memory or external PC memory. Once collected, large amounts of data pose their own challenge, the primary concern being separating the useful data from the complete data set.
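The link between ADC resolution and how faithfully the digital data represents the analog input can be made concrete. For an ideal N-bit converter spanning a full-scale range (FSR), one code step (LSB) is FSR / 2^N, the quantization error is at most half an LSB, and the quantization-limited signal-to-noise ratio for a full-scale sine wave is about 6.02N + 1.76 dB. The sketch below assumes an ideal bipolar converter and uses hypothetical function names; real ADCs add noise and distortion, so their effective resolution is lower.

```python
import math


def lsb_volts(full_scale_range_v: float, bits: int) -> float:
    """Size of one code step of an ideal ADC."""
    return full_scale_range_v / 2 ** bits


def ideal_snr_db(bits: int) -> float:
    """Quantization-limited SNR of an ideal N-bit ADC for a full-scale sine wave."""
    return 6.02 * bits + 1.76


def quantize(volts: float, full_scale_range_v: float, bits: int) -> tuple[int, float]:
    """Ideal bipolar ADC over [-FSR/2, +FSR/2): return (code, reconstructed volts).

    The reconstructed value is the centre of the code's bin, so for in-range
    inputs the error is at most half an LSB. Out-of-range inputs are clipped.
    """
    lsb = lsb_volts(full_scale_range_v, bits)
    code = math.floor((volts + full_scale_range_v / 2) / lsb)
    code = min(max(code, 0), 2 ** bits - 1)
    reconstructed = -full_scale_range_v / 2 + (code + 0.5) * lsb
    return code, reconstructed


if __name__ == "__main__":
    for n in (12, 16, 24):
        print(f"{n:2d} bits: LSB over +/-10 V = {lsb_volts(20.0, n) * 1e6:10.4f} uV, "
              f"ideal SNR = {ideal_snr_db(n):6.2f} dB")
```

Each extra bit halves the LSB and adds roughly 6 dB of ideal dynamic range, which is why resolution figures so prominently in DAQ specifications.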

Having a global community allows customers to use best practices, have more confidence in their measurement results, and get their issues resolved faster.

Increased processor capability, improved connectivity standards and the influence of mobile devices will continue to make data acquisition systems more reliable and flexible.

Satish Mohanram @National Instruments, India:

Touchscreen – Data Acquisition

As instruments have added new features, they’ve also added new knobs and buttons to support them. However, this approach does not scale. At some point, the number of knobs and buttons becomes inefficient and overwhelming. Some instruments have resorted to multi-layered menu systems and “soft buttons” that correspond to variable actions, but the complexity of these systems has created other usability issues. Most Generation Y engineers would describe today’s instruments as cumbersome.

An instrument that completely ditches physical knobs and buttons, and instead uses a touchscreen as the user interface, could solve these challenges. Rather than presenting all of the controls at once, the touchscreen could simplify the interface by dynamically delivering only the content and controls that are relevant to the current task. Users could also interact directly with the data on the screen rather than with a disjointed knob or button. They could use gesture-based interactions such as performing a pinch directly on the oscilloscope graph to change the time/div or volts/div. Touchscreen-based interfaces provide a more efficient and intuitive replacement for physical knobs and buttons.
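To make the pinch idea concrete, here is a hypothetical mapping from a pinch gesture to a new time/div setting. It assumes the touch framework reports a scale factor (greater than 1 when the fingers spread apart) and that the instrument keeps the familiar 1-2-5 sequence of time/div steps; the function names and both assumptions are illustrative and do not come from a specific product.

```python
import math


def snap_1_2_5(value: float) -> float:
    """Return the 1-2-5 sequence value (..., 0.1, 0.2, 0.5, 1, 2, 5, ...) nearest on a log scale."""
    if value <= 0:
        raise ValueError("value must be positive")
    decade = math.floor(math.log10(value))
    # Candidates from the decades below, at and above the value cover every nearest case.
    candidates = [m * 10.0 ** e
                  for e in (decade - 1, decade, decade + 1)
                  for m in (1, 2, 5)]
    return min(candidates, key=lambda c: abs(math.log10(c / value)))


def time_per_div_after_pinch(current_s_per_div: float, pinch_scale: float) -> float:
    """Spreading the fingers (scale > 1) zooms in, so fewer seconds per division."""
    if pinch_scale <= 0:
        raise ValueError("pinch scale must be positive")
    return snap_1_2_5(current_s_per_div / pinch_scale)
```

Snapping to the standard sequence keeps gesture-driven settings consistent with the values a physical knob would have produced.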

Mobile-Powered

By leveraging the hardware resources provided by mobile devices, instruments can take advantage of better components and newer technology.

This approach would look very different from today’s instruments. The processing and user interface would be handled by an app that runs on the mobile device. Since no physical knobs, buttons, or a display would be required, the instrument hardware would be reduced to only the measurement and timing systems, resulting in a smaller size and lower cost. Users wouldn’t be limited by the tiny built-in displays, small onboard storage, and slow operation. They could instead take advantage of large, crisp displays, gigabytes of data storage, and multi-core processors. Built-in cameras, microphones, and accelerometers could also facilitate new possibilities such as capturing a picture of a test setup or recording audio annotations for inclusion with data. Users could even develop custom apps to meet specialized needs.

While it’s entirely possible for traditional instruments to integrate better components, the pace at which this happens will lag behind mobile devices. Consumer electronics have faster innovation cycles and economies of scale, and instruments that leverage them will always have better technology and lower costs.

Cloud-Connected

Engineers commonly transfer data between their instruments and computers with USB thumb drives or with software that downloads data over an Ethernet or USB cable. While this process is fairly trivial, Generation Y has come to expect instantaneous access to data through cloud technologies. Services like Dropbox and iCloud store documents in the cloud and automatically synchronize them across devices. Combined with Wi-Fi and cellular networks that keep users continuously connected, these services let users access and edit their documents from anywhere at any time. Beyond storing files, some services host full applications in the cloud. With services like Google Docs, users can collaborate remotely and edit documents simultaneously from anywhere.

Instrumentation that incorporates network and cloud connectivity could provide these same benefits to engineers. Both the data and user interface could be accessed by multiple engineers from anywhere in the world. When debugging an issue with a colleague who is off site, rather than only sharing a static screenshot, engineers could interact with the instrument in real time to better understand the issue. Cloud technologies could greatly improve an engineering team’s efficiency and productivity.

Intelligent

Context-aware computing is beginning to emerge and could fundamentally change how we interact with devices. This technology uses situational and environmental information to anticipate users’ needs and deliver situation-aware content, features, and experiences. A popular example of this is Siri, a feature in recent Apple iOS devices. Users speak commands or ask questions to Siri, and it responds by performing actions or giving recommendations. Google Now provides similar functionality to Siri, but also passively delivers information that it thinks the user will want based on geolocation and search data: weather information and traffic recommendations appear in the mornings; meeting reminders are displayed with estimated time to arrive at the location; and flight information and boarding passes are surfaced automatically.

Similar intelligence, combined with instrumentation, could be game-changing. A common challenge engineers face is trying to change an instrument’s configuration while their hands are tied up with probes. Voice control could provide not only hands-free interaction but also easier access to features. In addition, predictive intelligence could be used to highlight relevant or interesting data. An oscilloscope could automatically zoom and configure itself around an interesting part of a signal, or add relevant measurements based on the signal shape. An instrument that leverages mobile devices could integrate and take advantage of context-aware computing as the technology develops.

Agilent Data Acquisition Products

Agilent product lines primarily cover the following:

- LXI-based modular data acquisition systems such as the 34972A and 34980A

- USB-based modular data acquisition systems

- PXI-based modular data acquisition systems

- PCI/PCIe/cPCI-based modular data acquisition cards

In addition to the hardware, each comes with a GUI that helps the user get started quickly without any programming knowledge. If programming is needed, all Agilent data acquisition systems provide a SCPI interface as well as a standard driver that allows interfacing with all common programming languages.
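As a rough illustration of the SCPI route, the sketch below sends one query to an instrument over a raw TCP socket using only the Python standard library. It assumes the instrument accepts newline-terminated SCPI commands on port 5025, a port commonly used for raw SCPI sockets on LXI instruments; check the instrument's programming guide for the actual port and command set. `*IDN?` is the standard IEEE 488.2 identification query, and `scpi_query` is a hypothetical helper name. In practice the standard driver or a VISA library would often be used instead.

```python
import socket

SCPI_RAW_PORT = 5025  # assumed raw-socket SCPI port; confirm in the instrument manual


def scpi_query(host: str, command: str, port: int = SCPI_RAW_PORT,
               timeout_s: float = 5.0) -> str:
    """Send one newline-terminated SCPI query and return the single-line reply."""
    with socket.create_connection((host, port), timeout=timeout_s) as sock:
        sock.sendall(command.encode("ascii") + b"\n")
        reply = bytearray()
        # Read until the instrument terminates its response with a newline.
        while not reply.endswith(b"\n"):
            chunk = sock.recv(4096)
            if not chunk:  # connection closed before a full line arrived
                break
            reply.extend(chunk)
    return reply.decode("ascii").strip()
```

A call such as `scpi_query("192.168.1.50", "*IDN?")` (hypothetical address) would return the instrument's identification string; measurement commands and channel lists differ between instruments and should be taken from the relevant programming guide.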

One benchmark of a data acquisition system’s flexibility, versatility and power is the number of ways in which it can be used. Agilent takes a modular approach to data acquisition, which gives customers maximum flexibility to configure the system exactly to their requirements. Our customers’ applications are testimony to this: we see these systems being used in a spectrum of applications, from mobile device manufacturing to big physics.

Typical data acquisition application domains include automotive testing, manufacturing test, physics research, automation and control, temperature monitoring, and parametric test and evaluation, to name a few.

Agilent data acquisition systems can also connect with mobile and tablet devices, including Android and iOS devices. Even the basic Agilent handheld DMMs come with an optional Bluetooth adapter for communicating with a mobile device to view and log data remotely.

Agilent has consistently incorporated the latest technologies into its systems, including the newest processors, operating systems and connectivity interfaces, and will continue to do so to provide the best value and protect customers’ investments.

NI Data Acquisition Products

With more than 30 years of experience and 50 million I/O channels sold, National Instruments DAQ is the most trusted computer-based measurement hardware available. Innovative DAQ hardware and NI-DAQmx driver technologies give you better accuracy and maximized performance. Regardless of the application, whether basic measurements or complex systems, NI has the right tools for the job. The breadth and depth of the National Instruments product offering is not available from any other vendor. National Instruments DAQ devices are offered on a variety of common PC buses, including USB, PCI, PCI Express, PXI, PXI Express, Wi-Fi (IEEE 802.11) and Ethernet, with a wide spectrum of measurement types such as voltage, current, temperature, pressure and sound. Key features of these products include:

- Integrated signal conditioning

- High channel density

- Modular scalable architecture

- Easy to install and use

- Advanced driver support: a single API for all buses

- Support for C, C++, LabVIEW, Visual Studio .NET, MATLAB, etc.

- Capability to leverage the benefits of PC technology

- Traceable accuracy

- NI-STC3 for enhanced streaming, logging and synchronization

- Support for Windows and Linux operating systems